Deep dive into Virtual Production in Unreal Engine

Virtual production is changing the face of storytelling across the media and entertainment industry, reshaping how films, shows and live events are made. Blending traditional techniques with digital innovations that leverage real-time game engines, virtual production affects everyone from producers and directors to stunt coordinators and grips. Yet while it is revolutionising the industry, questions remain about what exactly the term refers to. In this article, we take a deep dive into virtual production and the role Unreal Engine plays in this evolving field.

What is Virtual Production?

Virtual production joins the digital world with the physical world in real time, blending traditional filmmaking methods with modern technology. It helps creatives bring their visions to life. The broadcast industry has used virtual production for years to produce real-time graphics during live sports and election coverage, where input data is constantly changing, requiring graphics to update on the fly.

Nowadays, technological advances, particularly real-time game engines like Unreal Engine, have made high-fidelity photorealistic or stylized real-time graphics integral to live broadcasts, to animation, and to every stage of live-action filmmaking: before, during, and after principal photography on set.

Types of virtual production

Virtual production includes previs, pitchvis, techvis, stuntvis (action design), postvis, and live compositing (Simulcam). It also covers virtual scouting, VR location scouting, and in-camera VFX (on-set virtual production). We look at each of these in the sections below.

Differences between virtual production and traditional film production

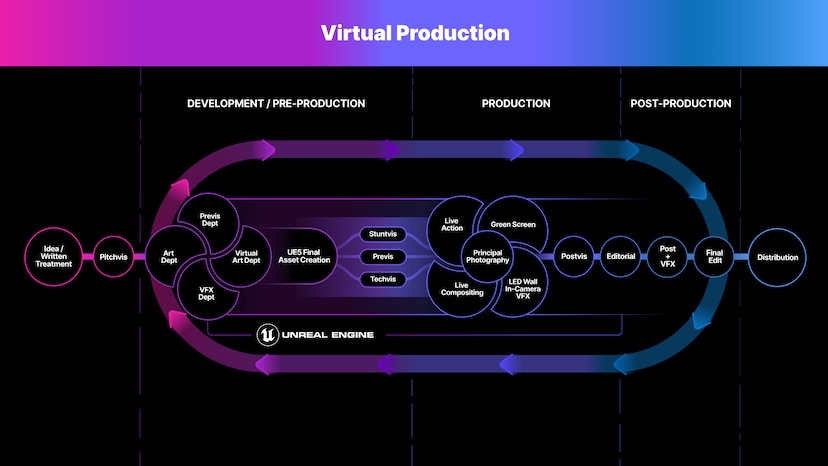

Traditional film production follows a linear process, moving from pre-production (concepting and planning) into production (filming), and finally to post-production (editing, color grading, and visual effects).

The downside of this traditional method is that filmmakers cannot see how all the elements come together until the very end. Making changes is therefore time-consuming and costly, sometimes requiring a complete restart, and filmmakers’ ability to make creative decisions during production is quite limited.

By contrast, virtual production blurs the lines between pre-production and the final result. It allows producers, cinematographers, and directors to see a representation of the complete look much earlier, so they can iterate quickly and economically and refine the narrative according to their creative vision. Ultimately, this supports telling better stories while saving both time and costs.

Using virtual production for visualization

Virtual production helps filmmakers visualize different aspects of their film before, during, and after the production phase, covering both live-action elements and visual effects or animated content. These visualization processes include:

- Previs

- Pitchvis

- Techvis

- Stuntvis (action design)

- Postvis

- Live compositing (Simulcam)

Let’s explore each phase in detail:

Previs

Previs, short for previsualization, is the next step beyond hand-drawn storyboards.

It uses computer-generated imagery (CGI) to rough out key visuals and action before shooting, defining the visual translation of the script and letting filmmakers experiment with different scenarios. In action scenes with minimal dialogue, previs also details the narrative elements that propel the story forward.

Previs acts as a reference for what needs to be shot live. It provides the basis for VFX bidding, where studios compete against each other for the contract to complete some or all of the shots.

In the past, previs relied on offline software to quickly render lower-resolution characters and assets, which were then combined. Modern game engines like Unreal Engine, however, can render full-resolution, photorealistic assets in real time. This lets filmmakers see their vision realized more vividly, refine elements interactively on the fly, and consequently make better choices.

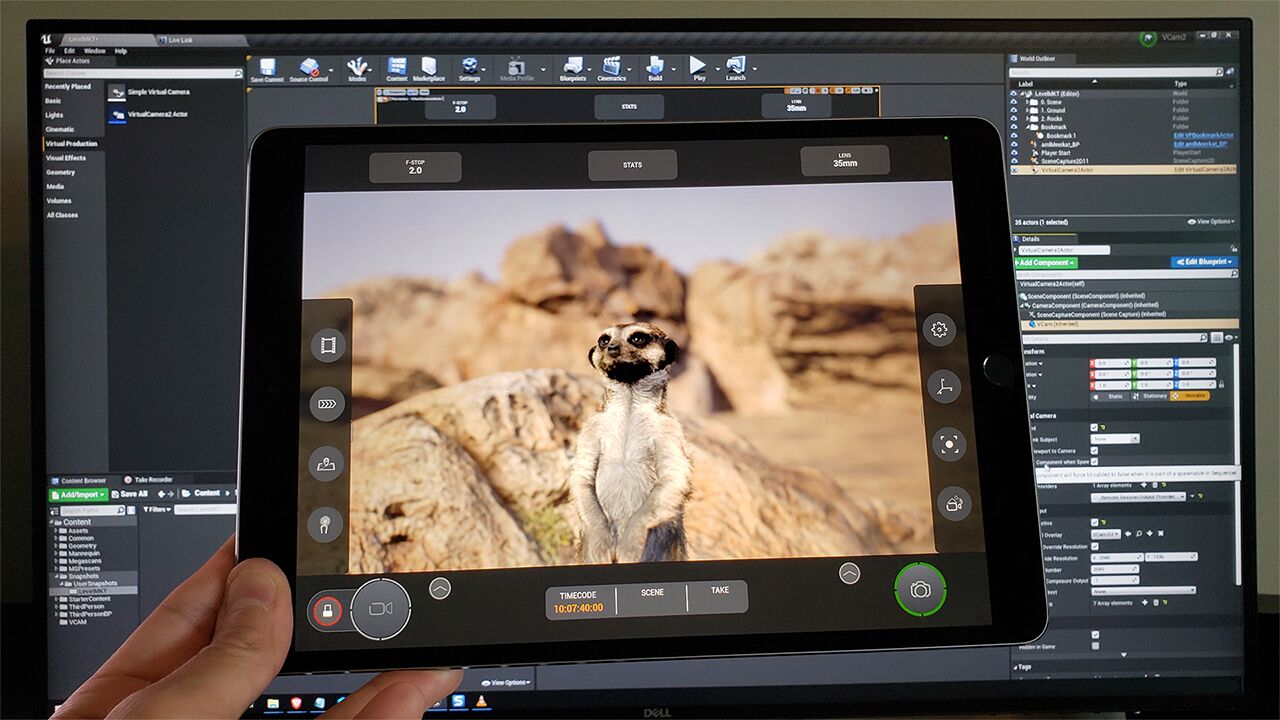

Previs, like several other virtual production stages, may use virtual cameras (VCams). A VCam lets filmmakers control a camera inside Unreal Engine from an external device, commonly an iPad, and record its movements. The recorded moves can serve as starting points for manual refinement during blocking. VCams also provide depth-of-field previews that help determine live-action camera settings. In some cases, the cameras generated with a VCam are even carried all the way through post-production as final cameras.

Many directors prefer interacting tactilely through a physical device over software interfaces. They find it more familiar and it results in more realistic camera moves in the digital world.
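
The depth-of-field previews mentioned above rest on standard thin-lens optics. To illustrate the kind of numbers such a preview has to respect, here is a minimal Python sketch of the classic hyperfocal-distance formulas. It is not Unreal Engine's implementation; the names `depth_of_field` and `DepthOfField` are hypothetical, and the 0.03 mm circle of confusion is an assumed value often used for full-frame sensors.

```python
import math
from dataclasses import dataclass


@dataclass
class DepthOfField:
    near_mm: float  # closest distance that appears acceptably sharp
    far_mm: float   # farthest sharp distance; math.inf at or past the hyperfocal distance


def depth_of_field(focal_mm: float, f_number: float, focus_mm: float,
                   coc_mm: float = 0.03) -> DepthOfField:
    """Thin-lens depth-of-field limits. All distances are in millimetres.

    coc_mm is the acceptable circle of confusion; 0.03 mm is an assumed
    full-frame value, not a universal constant.
    """
    if min(focal_mm, f_number, coc_mm) <= 0:
        raise ValueError("focal length, f-number and CoC must be positive")
    if focus_mm <= focal_mm:
        raise ValueError("focus distance must exceed the focal length")

    # Hyperfocal distance: focusing here keeps everything out to infinity sharp.
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm

    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    if focus_mm >= hyperfocal:
        far = math.inf
    else:
        far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    return DepthOfField(near, far)


# Example: a 50 mm lens at f/2.8, focused on an actor 3 m away.
dof = depth_of_field(50, 2.8, 3000)
print(f"sharp from {dof.near_mm / 1000:.2f} m to {dof.far_mm / 1000:.2f} m")
```

Opening the aperture (a lower f-number) or moving the focus closer narrows the sharp zone, which is exactly the trade-off a cinematographer checks before matching settings on the live-action camera.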

Pitchvis

Pitchvis refers to previs that usually happens before a project receives approval. Its purpose is to win stakeholder support by demonstrating the expected look and tone of a project before major investment is committed.

Indie filmmaker HaZ Dulull has shared his thoughts on using Unreal Engine for pitchvis.

Techvis

Techvis is used to carefully define the technical specifications of practical and digital shots before committing equipment, crew, and resources to a production day. It can be used to explore the feasibility of shot designs within the confines of a specific location, such as where a crane will go and whether it will fit on the set, as well as blocking that involves virtual elements. It can also help determine how much of a physical set is needed versus digital set extensions.
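
Many of these techvis questions come down to lens geometry. The horizontal field of view of a lens, and therefore how far back the camera must sit to frame a set piece, follows from similar triangles. The Python sketch below is illustrative only: the function names are hypothetical, it assumes an ideal rectilinear lens with no distortion, and the 36 mm sensor width in the example is an assumed full-frame value.

```python
import math


def horizontal_fov_deg(focal_mm: float, sensor_width_mm: float) -> float:
    """Horizontal field of view of an ideal rectilinear lens, in degrees."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))


def frame_width_m(distance_m: float, focal_mm: float, sensor_width_mm: float) -> float:
    """Width of the scene covered at a given distance (similar triangles)."""
    return distance_m * sensor_width_mm / focal_mm


def distance_to_frame_m(subject_width_m: float, focal_mm: float,
                        sensor_width_mm: float) -> float:
    """How far back the camera must be to fit a subject of this width in frame."""
    return subject_width_m * focal_mm / sensor_width_mm


def camera_fits(subject_width_m: float, focal_mm: float, sensor_width_mm: float,
                available_depth_m: float) -> bool:
    """Hypothetical techvis check: is there enough floor space in front of
    the set piece to frame it on this lens?"""
    return distance_to_frame_m(subject_width_m, focal_mm, sensor_width_mm) <= available_depth_m


# Example: framing a 6 m wide set piece with only 5 m of floor space in front of it,
# assuming a 36 mm wide sensor.
SENSOR_WIDTH_MM = 36.0
print(f"{horizontal_fov_deg(35, SENSOR_WIDTH_MM):.1f} degrees horizontal FOV on a 35 mm lens")
print(f"needs {distance_to_frame_m(6, 35, SENSOR_WIDTH_MM):.2f} m of camera distance")
print("fits" if camera_fits(6, 35, SENSOR_WIDTH_MM, 5.0) else "does not fit: try a wider lens")
```

In this example the 35 mm lens needs about 5.8 m and does not fit, while a 24 mm lens needs only 4 m. Catching that during planning, rather than on the shoot day, is the kind of practical information techvis is meant to deliver.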

While previs uses higher-quality assets for creative decisions, techvis often uses more basic assets because its objective is practical planning rather than creative decision-making. Its true value lies in providing practical information that the crew rely on to make the shoot more efficient.

Stuntvis (Action design)

Stuntvis, also called action design, blends elements of previs and techvis for physical stunt work and action sequences. It demands great precision so that action designers can choreograph shots accurately, secure creative approval, and keep stunt performers safe.

Unreal Engine incorporates real-world physics simulation, enabling stunt teams to rehearse and perform simulated shots that closely mirror reality. This can considerably improve production efficiency by reducing the number of shooting days.
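
To give a feel for what a physics-based rehearsal computes, here is a deliberately simplified Python sketch of a stunt fall. It steps the motion forward with a fixed time step using semi-implicit Euler integration, a scheme commonly used in game physics, and checks the result against the closed-form free-fall answer. It is not Unreal Engine's physics system; the function names, the 1/120 s step, and the quadratic drag term with its coefficient are all assumptions for illustration.

```python
import math
from typing import Tuple

GRAVITY = 9.81  # m/s^2, standard approximation


def analytic_fall(height_m: float) -> Tuple[float, float]:
    """Closed-form free fall with no air resistance: (time_s, impact_speed_m_s)."""
    t = math.sqrt(2 * height_m / GRAVITY)
    return t, GRAVITY * t


def simulate_fall(height_m: float, dt: float = 1 / 120,
                  drag_per_m: float = 0.0) -> Tuple[float, float]:
    """Step a falling body forward with semi-implicit Euler integration.

    drag_per_m is a hypothetical quadratic-drag coefficient divided by mass
    (units 1/m); 0.0 reproduces ideal free fall.
    """
    if height_m <= 0 or dt <= 0:
        raise ValueError("height and time step must be positive")
    fallen, speed, t = 0.0, 0.0, 0.0
    while fallen < height_m:
        accel = GRAVITY - drag_per_m * speed * speed  # drag opposes the downward motion
        speed += accel * dt   # update velocity first...
        fallen += speed * dt  # ...then position, using the new velocity
        t += dt
    return t, speed


# Example: a 10 m fall, checked against the closed-form answer.
t_sim, v_sim = simulate_fall(10.0)
t_ref, v_ref = analytic_fall(10.0)
print(f"simulated: {t_sim:.3f} s, {v_sim:.2f} m/s")
print(f"analytic:  {t_ref:.3f} s, {v_ref:.2f} m/s")
```

The closed form only covers the idealized case; stepping the simulation makes it easy to add effects such as drag, which is where a simulation earns its keep over back-of-the-envelope math.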

PROXi Virtual Production founders Guy and Harrison Norris have given a presentation on how they apply Unreal Engine techniques to action design and techvis for feature films.

Postvis

Postvis occurs after the physical shoot has finished. It is used for shots whose visual effects will be added to the live-action footage but are not yet complete. Usually, these shots have a green-screen element that is replaced by CGI in the final product. Postvis often combines the original previs of the VFX elements with the real footage.

Postvis conveys the director’s vision to visual effects teams. It also gives editors a more accurate version of pending VFX shots to cut with. Postvis lets filmmakers evaluate edits with a visual reference, even when the final VFX are far from finished. This allows the director to gain approval from all involved parties on the direction of the cut. It can even make early test screenings more representative of the intended final product.

Live compositing (Simulcam)

Live compositing is similar to postvis in that it combines live-action footage with a representation of CG elements as a reference. However, it happens during the shoot instead of afterwards (though, unfortunately, it never got the name “duringvis”). With live compositing, the director can see CG elements composited over the live action while shooting, and so gets a better sense of what is being captured.

Live compositing is typically used to visualize CG characters driven by performance capture data, which can be live, prerecorded or blended. Performance capture is a form of motion capture where actors’ performances are captured by specialized systems and can be transferred onto CG characters afterwards.
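
At its core, live compositing layers the rendered CG element over the camera plate using an alpha (coverage) channel. The minimal Python sketch below shows the standard Porter-Duff "over" operation applied per pixel, assuming straight (non-premultiplied) alpha and RGB values between 0 and 1. It leaves out everything a real Simulcam setup also needs, such as camera tracking, lens matching and frame synchronization, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def over(cg: RGB, alpha: float, plate: RGB) -> RGB:
    """Porter-Duff 'over' for one pixel with straight (non-premultiplied) alpha.

    alpha = 1 shows only the CG element, alpha = 0 only the live-action plate.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    r, g, b = (c * alpha + p * (1.0 - alpha) for c, p in zip(cg, plate))
    return (r, g, b)


def composite_frame(cg_rgb: Sequence[RGB], cg_alpha: Sequence[float],
                    plate_rgb: Sequence[RGB]) -> List[RGB]:
    """Composite a CG render over a camera frame, pixel by pixel (flattened)."""
    if not len(cg_rgb) == len(cg_alpha) == len(plate_rgb):
        raise ValueError("CG, alpha and plate must have the same pixel count")
    return [over(c, a, p) for c, a, p in zip(cg_rgb, cg_alpha, plate_rgb)]


# Example: a three-pixel frame where a red CG character fully covers the first pixel,
# half covers the second (an anti-aliased edge) and misses the third.
plate = [(0.2, 0.4, 0.6)] * 3
cg = [(1.0, 0.0, 0.0)] * 3
alpha = [1.0, 0.5, 0.0]
print(composite_frame(cg, alpha, plate))
```

A production pipeline would run this on the GPU over whole images every frame, but the per-pixel blend is the same idea.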

Virtual scouting

Virtual scouting represents another facet of virtual production. This powerful tool allows key creatives such as the director, production designer, and cinematographer to review a virtual location using virtual reality (VR). Fully immersing themselves in the digital set gives them a real sense of its scale. Virtual scouting can help teams design particularly demanding sets and choreograph complex scenes.

Another use of virtual scouting is set dressing, allowing teams to rearrange digital assets while experiencing the surroundings at a life-size scale.

Virtual scouting sessions frequently foster collaboration, as multiple stakeholders review the set together in real time. Together they explore it, then identify and bookmark areas of particular interest for filming specific scenes. Participants may also call out which props require physical builds and which can remain virtual.

VR location scouting

VR location scouting is an extension of virtual scouting in which the set is a real place. One team member may use photogrammetry tools like RealityCapture to capture the location, and other members then review it remotely via virtual scouting in Unreal Engine. This saves considerable time and travel costs compared to in-person visits, especially when choosing among multiple locations.

In-camera VFX (ICVFX)

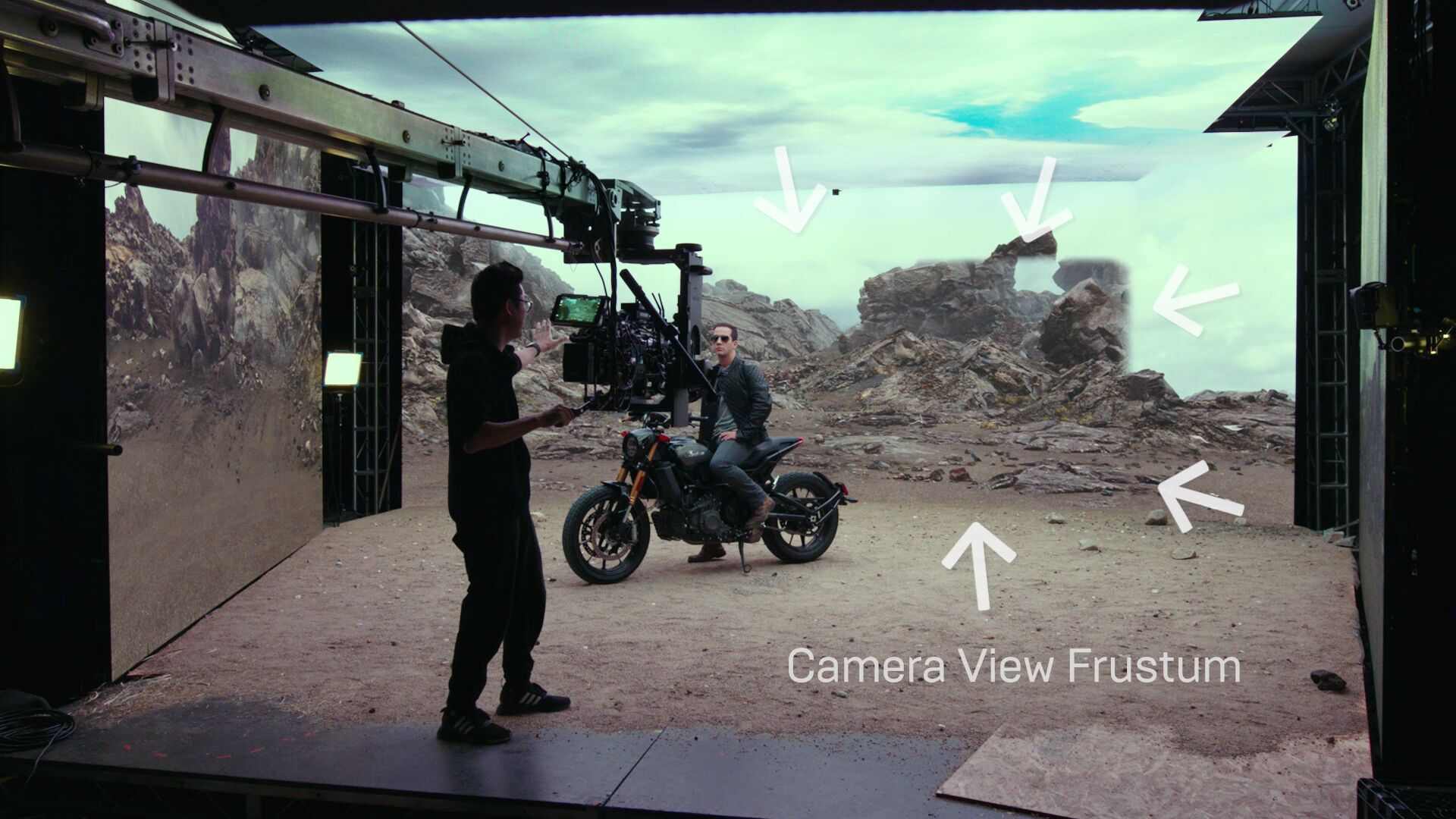

In-camera VFX, sometimes called on-set virtual production or OSVP, is one form of virtual production. With this technique, live-action shooting takes place inside a large LED stage, or volume, whose walls and ceiling display an environment rendered by the engine in real time. The most sophisticated LED volumes are hemispherical domes that fully enclose the stage, while simpler stages may resemble half cubes or even a single wall.

It goes beyond background replacement because the virtual scene updates live as the camera moves, creating a true-to-life parallax effect so that everything appears correctly placed in perspective. The LED displays also cast realistic light and reflections onto the actors, immersing them entirely in the scene.
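
The parallax effect depends on rendering the wall's content from the tracked camera's position rather than from a fixed viewpoint. One well-known way to do this for a flat screen is a generalized, or off-axis, perspective projection, which produces an asymmetric view frustum. The Python sketch below computes the frustum extents for a single flat rectangular LED panel. It is an illustration under stated assumptions, not how Unreal Engine's in-camera VFX tools are implemented; the corner-naming convention and function names are hypothetical.

```python
import math
from typing import NamedTuple, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec3) -> Vec3:
    length = math.sqrt(dot(a, a))
    return (a[0] / length, a[1] / length, a[2] / length)


class Frustum(NamedTuple):
    left: float
    right: float
    bottom: float
    top: float
    near: float


def off_axis_frustum(lower_left: Vec3, lower_right: Vec3, upper_left: Vec3,
                     camera: Vec3, near: float) -> Frustum:
    """Asymmetric view frustum from a tracked camera to a flat rectangular panel.

    Extents are measured on the near plane, along the panel's own right/up axes.
    """
    right_axis = normalize(sub(lower_right, lower_left))
    up_axis = normalize(sub(upper_left, lower_left))
    normal = normalize(cross(right_axis, up_axis))  # points toward the viewer

    to_ll = sub(lower_left, camera)
    to_lr = sub(lower_right, camera)
    to_ul = sub(upper_left, camera)

    distance = -dot(to_ll, normal)  # perpendicular camera-to-panel distance
    if distance <= 0:
        raise ValueError("camera must be in front of the panel")
    scale = near / distance  # project the panel edges onto the near plane

    return Frustum(left=dot(right_axis, to_ll) * scale,
                   right=dot(right_axis, to_lr) * scale,
                   bottom=dot(up_axis, to_ll) * scale,
                   top=dot(up_axis, to_ul) * scale,
                   near=near)


# Example: a 4 m x 2 m wall centred on the origin in the z = 0 plane.
wall = ((-2.0, -1.0, 0.0), (2.0, -1.0, 0.0), (-2.0, 1.0, 0.0))
print(off_axis_frustum(*wall, camera=(0.0, 0.0, 3.0), near=0.1))  # centred: symmetric
print(off_axis_frustum(*wall, camera=(1.0, 0.0, 3.0), near=0.1))  # tracked move: skewed
```

When the camera moves 1 m to the right, the left extent grows and the right extent shrinks. Recomputing this every frame from tracking data, and feeding the extents into a glFrustum-style projection, is what keeps the background's perspective consistent from the lens's point of view. Curved walls are beyond this sketch.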

Benefits of in-camera VFX

In traditional production, when live-action footage will eventually be combined with CGI, actors are shot against a green-screen background that is later replaced with digital elements in post-production. The actors and director have to imagine the final environment. With on-set virtual production, by contrast, the cast and crew work in context, which makes it easier to deliver convincing performances and make better creative decisions.

In some cases, in-camera VFX means that shots that would traditionally be complex need only a small amount of post-production. Even shots that remain difficult can take less time to finish in post than with traditional methods.

A big advantage of LED stage work is simple control over lighting and time of day, with no more delays waiting for ideal natural lighting conditions. Continuity is also easier, as scenes can be revisited in exactly the state they were left in within minutes rather than hours.

Unreal Engine provides a fully featured set of tools to support in-camera virtual production, and innovative filmmakers are already experimenting with it and demonstrating its capabilities for ICVFX.

We hope you enjoyed this overview and that it makes virtual production and its visualization processes easier to understand.

iRender - The best render farm for Unreal Engine rendering

iRender provides powerful render machines supporting all versions of Unreal Engine (with all plugins). Our GPU render farm houses robust machines with RTX 4090/RTX 3090 GPUs, AMD Threadripper Pro CPUs, 256GB RAM and 2TB SSD storage to speed up rendering of Unreal Engine projects of any scale. Check out our Unreal Engine GPU Cloud Rendering service for more details and to find the best plan for your projects.

Reference sources: unrealengine.com
