How much VRAM do you need for 3D rendering?

When building a PC for rendering, the amount of VRAM your GPU needs depends on the software you use and the complexity of your projects. Choosing a GPU with enough VRAM is essential for efficient 3D rendering, since VRAM directly limits how complex a scene the GPU can handle. In this article, we take a closer look at VRAM and go through the VRAM demands of popular rendering workloads.

What’s VRAM?

VRAM (Video Random Access Memory) is high-speed memory located on your graphics card. It is one component of a larger memory subsystem and serves as fast, temporary storage for the graphics processor, making sure the processor has access to the data it needs to process and display images smoothly.

Source: Nvidia

Before the GPU can process a single frame, VRAM holds all the models, textures, geometry, and lighting maps ready for the graphics processor to use when rendering that frame. After rendering is done, the GPU stores the finished frame in VRAM as a framebuffer. This framebuffer is then sent to the display to output the final image on your monitor.

Rendering simply means running the many graphical computations that, combined, produce the final image. The GPU performs these calculations on the data stored in VRAM.

In simplified terms, this is generally what happens when 3D rendering software renders an image for display on a monitor:

- First, scene data such as textures, polygons, animations, and lights is loaded from your storage drive into the VRAM of your GPU.

- Then, the GPU will trace rays through each pixel on the screen.

- When a ray hits a surface, the GPU looks up in VRAM which polygons, lights, and textures are associated with that particular pixel.

- Once the GPU has finished checking all pixels for that single frame, the frame is complete and can be stored back in the VRAM again.

- Finally, the finished rendered frame is displayed on the monitor or saved onto the storage disk.
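The steps above can be illustrated with a toy renderer. This is only a minimal sketch in plain Python running on the CPU, not how a real render engine works: the scene format (a list of spheres), the orthographic one-ray-per-pixel camera, and the `render` function are all assumptions made for illustration.

```python
import math

def render(spheres, width, height, background=(0, 0, 0)):
    """Toy orthographic ray caster: one ray per pixel, nearest sphere wins."""
    # Step 1: the "scene data" is a list of (cx, cy, cz, radius, color) spheres,
    # standing in for the textures, polygons, and lights loaded into VRAM.
    framebuffer = [[background] * width for _ in range(height)]
    for py in range(height):
        for px in range(width):
            # Step 2: send one ray through this pixel, straight into the screen.
            nearest_depth, color = math.inf, background
            for cx, cy, cz, radius, sphere_color in spheres:
                offset_sq = (px - cx) ** 2 + (py - cy) ** 2
                if offset_sq <= radius ** 2:
                    # Step 3: the ray hits this sphere; look up its depth and color.
                    depth = cz - math.sqrt(radius ** 2 - offset_sq)
                    if depth < nearest_depth:
                        nearest_depth, color = depth, sphere_color
            # Step 4: store the finished pixel in the framebuffer.
            framebuffer[py][px] = color
    # Step 5: the caller can now display the frame or save it to disk.
    return framebuffer

red = (255, 0, 0)
frame = render([(2, 2, 5, 1, red)], width=5, height=5)
print(frame[2][2])  # pixel at the sphere's center
```

A real GPU renderer runs the per-pixel work in parallel across thousands of cores, which is why all the scene data has to be within fast reach in VRAM.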

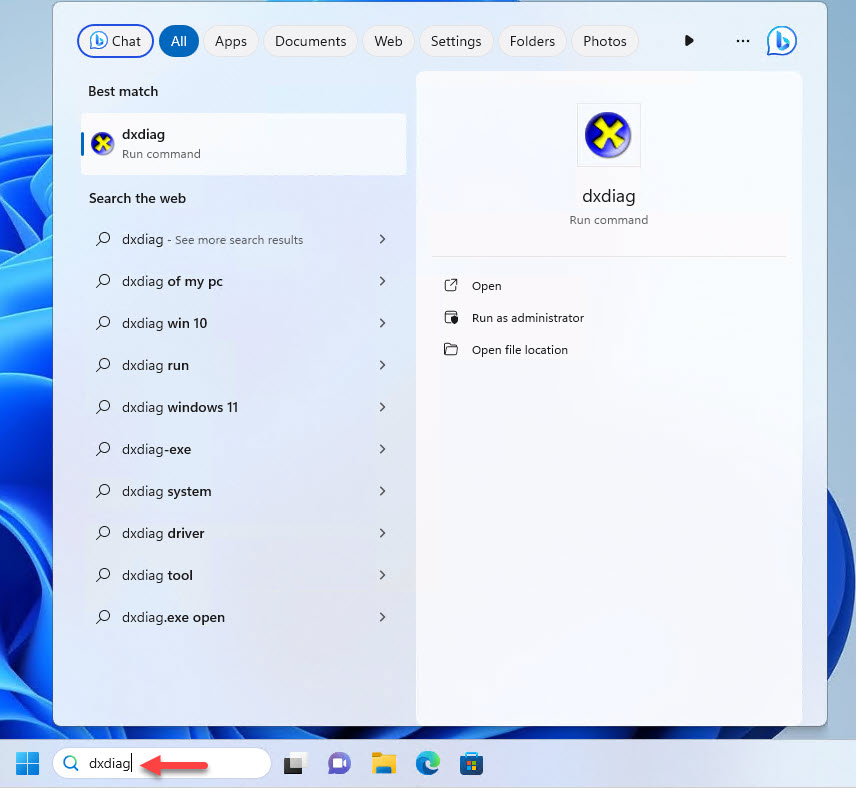

How to check VRAM?

VRAM is soldered directly onto the graphics card’s PCB (printed circuit board). It can’t be upgraded or swapped for other modules.

You can check VRAM by using the DirectX Diagnostic tool on your PC. Here are the steps:

- Press Windows + R, type “dxdiag”, and click OK to open the DirectX Diagnostic Tool (dxdiag).

- In the dxdiag window, select the Display tab.

- Under the Device list, scroll down until you find the Display Memory (VRAM) entry.

In this case, we are using an RTX 4090 with 24GB of VRAM.
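If you prefer the command line, VRAM can also be read programmatically. The sketch below assumes an NVIDIA GPU with the `nvidia-smi` tool (installed with the NVIDIA driver) available on your PATH. The helper names `parse_gpu_memory` and `query_gpu_memory` are made up for this example.

```python
import subprocess

def parse_gpu_memory(csv_text):
    """Parse 'name, memory.total, memory.used' CSV lines (values in MiB)."""
    gpus = []
    for line in csv_text.strip().splitlines():
        # Split from the right so a comma inside the GPU name would not break parsing.
        name, total_mib, used_mib = [field.strip() for field in line.rsplit(",", 2)]
        gpus.append({"name": name, "total_mib": int(total_mib), "used_mib": int(used_mib)})
    return gpus

def query_gpu_memory():
    """Ask nvidia-smi for each GPU's name, total VRAM, and used VRAM."""
    output = subprocess.run(
        ["nvidia-smi",
         "--query-gpu=name,memory.total,memory.used",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_memory(output)
```

Calling `query_gpu_memory()` returns one dictionary per GPU, which is handy for watching how close a scene gets to the VRAM limit while it renders.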

What uses VRAM?

The framebuffer used to drive a monitor takes up very little VRAM: around 50MB for a 4K HDR image. This is why GPUs that only drive displays, for workloads like basic browsing and word processing, don’t need large VRAM capacities.
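The framebuffer figure can be sanity-checked with simple arithmetic: width times height times bytes per pixel. The two pixel formats below are assumptions, since the article does not say which HDR format is meant. A packed 10-bit format uses 4 bytes per pixel and a 16-bit float RGBA format uses 8.

```python
def framebuffer_bytes(width, height, bytes_per_pixel):
    """Size of one uncompressed framebuffer in bytes."""
    return width * height * bytes_per_pixel

MB = 1000 * 1000

uhd_packed_10bit = framebuffer_bytes(3840, 2160, 4)  # 10 bits per color channel, packed
uhd_float16 = framebuffer_bytes(3840, 2160, 8)       # 16-bit float per RGBA channel

print(f"{uhd_packed_10bit / MB:.1f} MB")  # 33.2 MB
print(f"{uhd_float16 / MB:.1f} MB")       # 66.4 MB
```

Either way, a single 4K frame takes up tens of megabytes, which is tiny next to the gigabytes of VRAM on a modern GPU.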

However, GPUs that render complex visual tasks require significantly more VRAM. In addition to the framebuffer, they need several data buffers to store textures, lighting, shadows, geometry, and other scene data, which quickly fill up the available VRAM. Intensive features such as ray tracing, anti-aliasing, and complex texture maps, as well as working at high resolutions, increase VRAM usage further.

Essentially, any data that the GPU processes is loaded into VRAM. Depending on the specific rendering workloads, this can involve Data Buffers, Frame Buffers; Textures, Videos, Image sequences; Polygons, Meshes, Geometry; Lights, Light Caches, Ray-Trees, Depth Maps, UV Maps, and Databases.
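Textures are often the largest item on that list. As a rough sketch, an uncompressed texture needs width × height × channels × bytes per channel, and a full mipmap chain adds about one third on top. Real render engines may compress or convert textures, so treat the `texture_bytes` helper below as a hypothetical back-of-the-envelope estimate only.

```python
def texture_bytes(width, height, channels=4, bytes_per_channel=1, mipmaps=True):
    """Rough size of one uncompressed texture in bytes."""
    base = width * height * channels * bytes_per_channel
    # A full mip chain (1 + 1/4 + 1/16 + ...) approaches 4/3 of the base level.
    return base * 4 // 3 if mipmaps else base

GIB = 1024 ** 3

one_4k_texture = texture_bytes(4096, 4096)  # 8-bit RGBA with mipmaps
fifty_textures = 50 * one_4k_texture

print(f"one 4K texture: {one_4k_texture / 1024 ** 2:.1f} MiB")  # 85.3 MiB
print(f"fifty 4K textures: {fifty_textures / GIB:.2f} GiB")     # 4.17 GiB
```

Under these assumptions, fifty 4K textures already take up more than half of an 8GB card before any geometry or lighting data is loaded.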

Does more VRAM improve the rendering performance?

More VRAM can make rendering faster, but only if your original amount of VRAM was too low for the scene.

A GPU uses VRAM the same way the CPU uses RAM (system memory). When RAM is full, data is moved to a slower page file on your storage (hard disk or SSD). This slows performance and can lead to crashes. The same problem occurs with insufficient VRAM: when VRAM is full, data is offloaded to RAM instead. However, accessing data in RAM is much slower for the GPU than accessing VRAM, because RAM is farther from the graphics processor and the data has to travel over more connections and narrower buses.
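To get a feel for the size of that gap, the sketch below compares how long it takes to read the same data once at two peak bandwidths. Both numbers are assumptions taken from public spec sheets (about 1008 GB/s for the RTX 4090's VRAM and about 32 GB/s for a PCIe 4.0 x16 link in one direction). Real transfers are slower and also pay a latency cost.

```python
def read_time_ms(size_gb, bandwidth_gb_per_s):
    """Time to stream size_gb once at the given peak bandwidth, in milliseconds."""
    return size_gb / bandwidth_gb_per_s * 1000

VRAM_BANDWIDTH = 1008     # GB/s, assumed: RTX 4090 spec-sheet peak
PCIE4_X16_BANDWIDTH = 32  # GB/s, assumed: approximate PCIe 4.0 x16 peak, one direction

scene_gb = 10
print(f"from VRAM: {read_time_ms(scene_gb, VRAM_BANDWIDTH):.1f} ms")                       # 9.9 ms
print(f"from system RAM over PCIe: {read_time_ms(scene_gb, PCIE4_X16_BANDWIDTH):.1f} ms")  # 312.5 ms
```

Even with these idealized figures, data that spills into system RAM is roughly thirty times slower to reach than data held in VRAM.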

In such cases, increasing VRAM can significantly improve performance because the data can now reside entirely in the GPU’s memory, where the graphics processor can access it quickly. However, increasing VRAM means buying a new GPU or using NVLink.

VRAM capacity is important, but choosing a GPU solely on VRAM neglects other vital performance factors. Lower-tier GPUs are sometimes sold with more VRAM than higher-tier GPUs, which sounds attractive. However, simply having more VRAM won’t make a less powerful GPU outperform a higher-tier one. The best approach is to understand the VRAM and overall performance needs of your workloads, and then choose a GPU that meets those requirements within your budget.

Let’s take a closer look at some rendering workloads and their VRAM requirements.

VRAM usage for rendering workloads

CPU Rendering

CPU rendering uses the processor’s cores rather than the GPU for processing. As such, there is little to no need for a powerful GPU, let alone one with a large amount of VRAM, to achieve fast and efficient rendering performance.

For workloads that employ CPU-based render engines such as V-Ray CPU, Corona, or C4D’s Physical Renderer, it is better to focus your budget on purchasing a CPU that has as many cores as possible. It will ensure that you get the best rendering performance.

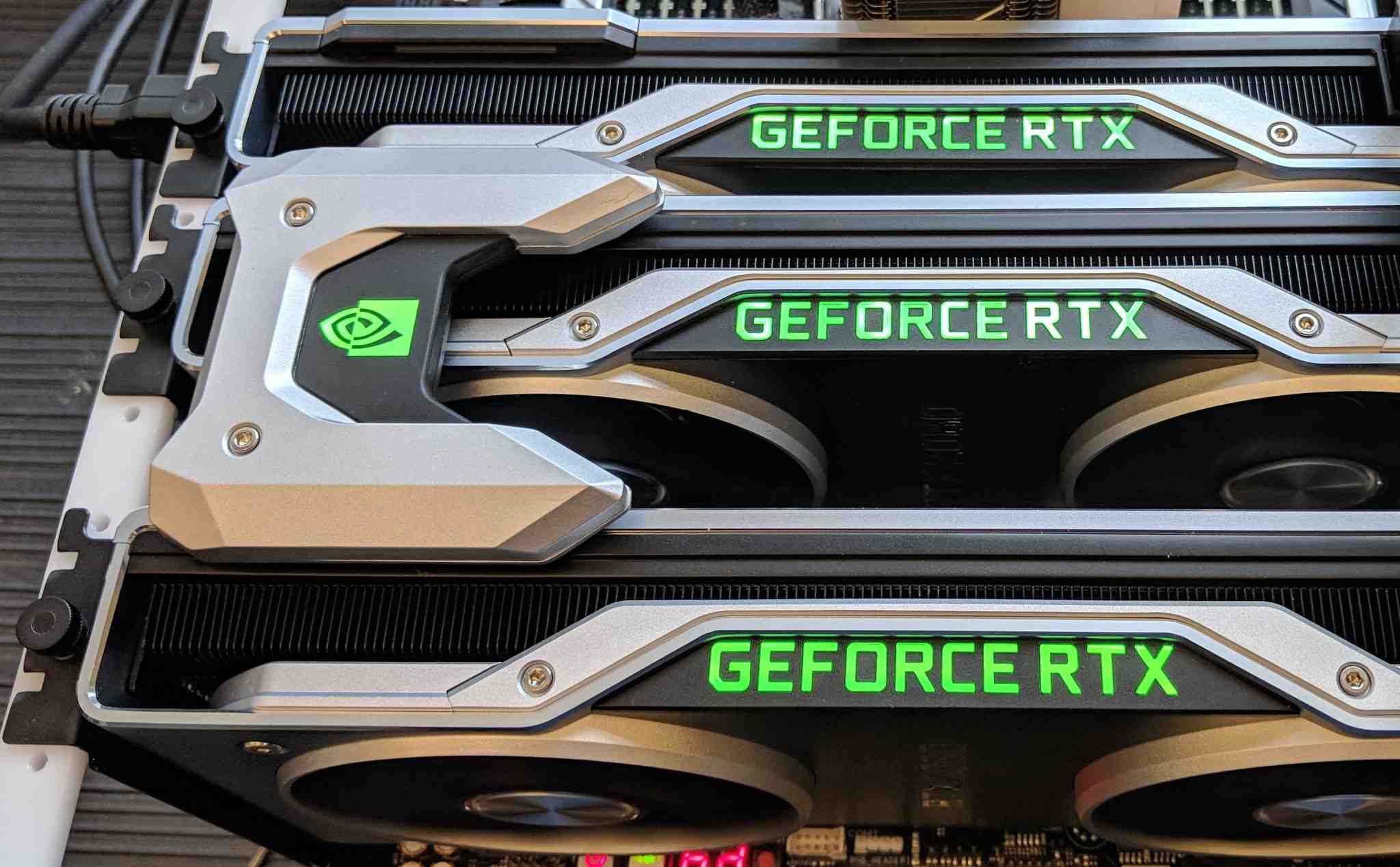

GPU Rendering

GPU rendering performance relies heavily on both the GPU’s processing capabilities and its VRAM capacity. You need to make sure your scene fits within VRAM for the GPU to operate at full performance. Most GPU-based render engines like Redshift, Octane, and V-Ray GPU render significantly faster when enough VRAM is available, especially for complex 3D scenes with a large number of polygons, high-resolution textures, and complex lighting.

Simple scenes with low-poly models and lower-resolution textures can often be rendered efficiently on a GPU with 8GB or even 6GB of VRAM. However, for more demanding projects with higher-resolution textures and high polygon counts, it is best to buy a GPU with at least 10GB of VRAM. This ensures the scene fits fully in GPU memory and is not partially stored in the slower system RAM, which can noticeably reduce speed.

Additionally, rendering at higher resolutions, such as print-sized render resolutions, typically requires a GPU with a sizable amount of VRAM. Even for moderately complex scenes, the render buffer grows substantially as the pixel count increases.
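As a rough illustration of how quickly print resolutions add up, the sketch below converts a print size and DPI into pixels and multiplies by the bytes per pixel and the number of render passes. The 16 bytes per pixel (32-bit float RGBA) and the assumption that every pass is held in VRAM at once are illustrative guesses. Actual buffer layouts depend on the render engine.

```python
def print_render_buffer_bytes(width_in, height_in, dpi, passes=1, bytes_per_pixel=16):
    """Estimated render buffer size for a print of the given size in inches."""
    width_px = round(width_in * dpi)
    height_px = round(height_in * dpi)
    return width_px * height_px * bytes_per_pixel * passes

GB = 1000 ** 3

poster_one_pass = print_render_buffer_bytes(24, 36, 300)            # 7200 x 10800 pixels
poster_eight_passes = print_render_buffer_bytes(24, 36, 300, passes=8)

print(f"one pass: {poster_one_pass / GB:.2f} GB")          # 1.24 GB
print(f"eight passes: {poster_eight_passes / GB:.2f} GB")  # 9.95 GB
```

Under these assumptions, a 24 × 36 inch poster at 300 DPI with eight passes would need about 10GB for the buffers alone, before any scene data is counted.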

For the most complex projects, you will need a large amount of VRAM. A good choice is the RTX 4090 with its 24GB of VRAM. Alternatively, you can choose a professional workstation GPU like the RTX A6000 with 48GB of VRAM, although much of its considerably higher price pays for the extra VRAM capacity rather than for extra processing capability.

Some render engines like Redshift and OctaneRender use “out-of-core” rendering to work around limited VRAM. In Redshift, if the scene exceeds the GPU’s VRAM, system RAM is used instead. This costs performance, although some scene data, such as textures, may perform comparably whether stored in VRAM or system RAM. However, out-of-core rendering only supports some data types, and exceeding the VRAM can still cause crashes.

Depending on the software you use, it may be better to invest in a powerful GPU with enough VRAM to avoid any slowdowns.

In summary, for GPU rendering, the VRAM requirements are roughly:

- Baseline: 8GB of VRAM

- Moderately complex scenes: 10-16GB of VRAM (RTX 3080/RTX 4080)

- Highly complex scenes: 24GB or more of VRAM (RTX 3090/RTX 4090 or RTX A6000) or multiple GPUs
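If you can estimate how much memory your scene needs, the tiers above can be turned into a quick check. The sketch below is hypothetical: the list of capacities simply collects the VRAM sizes mentioned in this article, and the 20% headroom factor is an assumed safety margin, not a figure from any render engine.

```python
# VRAM sizes (in GB) mentioned in this article, smallest first.
COMMON_VRAM_GB = [6, 8, 10, 16, 24, 48]

def smallest_fitting_vram(scene_gb, headroom=1.2):
    """Return the smallest listed VRAM size that fits the scene plus headroom.

    Returns None if even the largest listed card is too small.
    """
    needed = scene_gb * headroom  # assumed margin for buffers and overhead
    for capacity in COMMON_VRAM_GB:
        if capacity >= needed:
            return capacity
    return None

print(smallest_fitting_vram(7))   # 10
print(smallest_fitting_vram(13))  # 16
print(smallest_fitting_vram(45))  # None
```

A result of `None` means the scene would not fit on any single card in the list, so it would need to be simplified or rendered out of core.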

VRAM utilisation on multi-GPU systems

Using multiple GPUs in one system can significantly accelerate certain workloads like 3D rendering. However, the available VRAM does not combine or stack when adding more GPUs. The current graphics card and PCIe slot technology do not allow for real-time access to another GPU’s memory without significant latency.

In a typical multi-GPU setup, each GPU loads its own identical copy of the scene data and renders its own portion of the work in parallel. This parallel processing is what improves performance, and it is also why the scene must fit into each GPU’s VRAM individually.

Memory combining is possible when connecting GPUs directly through an NVLink bridge. NVIDIA offers the potential for memory pooling through NVLink with some premium GeForce and Quadro cards. However, for this to work, the software used for the workload must support the combined memory of multiple GPUs.

Relying on NVLink is not always practical, though. Because software support is limited, it is recommended to buy GPUs with larger individual VRAM capacities, even in multi-GPU configurations. This ensures that each GPU has enough VRAM to handle the workload independently.
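The rule of thumb from this section can be written as a small function. It is a simplified model, not a description of any specific render engine: without pooling, the usable scene size is limited by the smallest card, and the `pooled=True` case assumes both the hardware and the software support NVLink memory pooling across all listed GPUs.

```python
def usable_vram_gb(gpu_vram_gb, pooled=False):
    """Largest scene footprint (in GB) a multi-GPU setup can hold in this model."""
    if not gpu_vram_gb:
        raise ValueError("at least one GPU is required")
    if pooled:
        # Assumed: NVLink pooling is supported by both hardware and software.
        return sum(gpu_vram_gb)
    # Each GPU holds its own full copy of the scene, so the smallest card is the limit.
    return min(gpu_vram_gb)

print(usable_vram_gb([24, 24]))               # 24
print(usable_vram_gb([24, 10]))               # 10
print(usable_vram_gb([24, 24], pooled=True))  # 48
```

Adding a second 24GB card doubles the processing power, but without pooling the scene still has to fit in 24GB.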

iRender provides powerful render machines supporting all software and plugins of any version. Our GPU render farm houses the most robust machines from 1 to 8 RTX 4090/RTX 3090, AMD Threadripper Pro CPUs, 256GB RAM and 2TB SSD storage to boost rendering 3D projects of any scale. Join us and render faster!

Reference source: Nvidia, CG Director
