Infrastructure requirements for AI and machine learning

IT owes its existence as a professional discipline to companies seeking a competitive edge from information. Today, organizations are awash in data, but the technology to process and analyze it often struggles to keep up with the deluge, as every machine, application and sensor emits an endless stream of telemetry. An explosion in unstructured data has proved particularly challenging for traditional information systems based on structured databases, and this has sparked the development of new algorithms based on machine learning and deep learning.

This, in turn, has led organizations to either buy or build systems and infrastructure for AI, machine learning and deep learning workloads. In this article, we give an overview of the basic infrastructure requirements for working with AI, machine learning and deep learning.

Background overview

The nexus of geometrically expanding unstructured data sets, a surge in machine learning (ML) and deep learning (DL) research, and exponentially more powerful hardware designed to parallelize and accelerate ML and DL workloads has fueled an explosion of interest in enterprise AI applications. IDC predicts AI will become widespread by 2024, used by three-quarters of all organizations, with 20% of workloads and 15% of enterprise infrastructure devoted to AI-based applications.

Enterprises will build many of these applications on the cloud. But the massive amount of data required to train and feed such algorithms, the prohibitive cost of moving data to (and storing it in) the cloud, and the need for real-time or near-real-time results mean that many enterprise AI systems will be deployed on private, dedicated systems.
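To make the data-movement argument concrete, here is a back-of-the-envelope sketch of how long it takes just to push a training data set over a network link. The data set sizes, the 10 Gbps link and the 70% efficiency factor are hypothetical figures chosen for illustration, and the calculation ignores storage and egress fees entirely.

```python
# Back-of-the-envelope: time to move a training data set over a network link.
# All sizes, link speeds and efficiency factors are hypothetical examples.

def transfer_hours(dataset_tb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Hours to move `dataset_tb` terabytes (decimal, 10**12 bytes) over a
    `link_gbps` gigabit-per-second link running at `efficiency` of line rate."""
    bits = dataset_tb * 10**12 * 8
    seconds = bits / (link_gbps * 10**9 * efficiency)
    return seconds / 3600

if __name__ == "__main__":
    # 100 TB at full line rate on a 10 Gbps link: 80,000 s, about 22.2 hours.
    print(f"{transfer_hours(100, 10):.1f} h")
    # The same transfer at an assumed 70% sustained efficiency.
    print(f"{transfer_hours(100, 10, efficiency=0.7):.1f} h")
    # 1 PB at full line rate on the same link: about 9.3 days.
    print(f"{transfer_hours(1000, 10) / 24:.1f} days")
```

Even under ideal conditions, a petabyte-scale data set ties up a 10 Gbps link for more than a week, before any per-gigabyte transfer or storage charges are considered.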

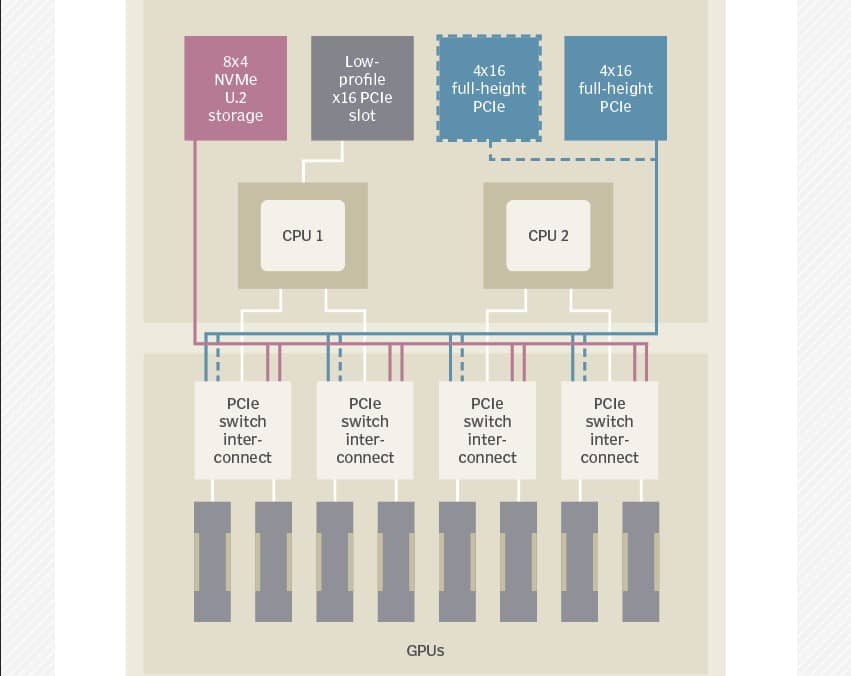

In preparing for an AI-enhanced future, IT must grapple with many architectural and deployment choices. Chief among these is the design and specification of AI-accelerated hardware clusters. One promising option is hyper-converged infrastructure (HCI), thanks to its density, scalability and flexibility. While many elements of AI-optimized hardware are highly specialized, the overall design bears a strong resemblance to more ordinary hyper-converged hardware.

Popular infrastructure for machine learning and AI use cases

Most AI systems run on Linux VMs or as Docker containers. Indeed, most popular AI development frameworks and many sample applications are available as prepackaged container images from Nvidia and others. Popular applications include:

- computer vision such as image classification, object detection (in either images or videos), image segmentation and image restoration;

- speech and natural language processing, such as speech recognition and language translation;

- text-to-speech synthesis;

- recommendation systems that provide ratings and suggested, personalized content or products based on prior user activity and preferences;

- content analysis, filtering and moderation; and

- pattern recognition and anomaly detection.

These have applications in a wide variety of industries, for example:

- fraud analysis and automated trading systems for financial services companies;

- online retail personalization and product recommendations;

- surveillance systems for physical security firms; and

- geologic analysis for resource extraction by oil, gas and mining companies.

Some applications, such as anomaly detection for cybersecurity and automation systems for IT operations (AIOps), span industries, with AI-based features being incorporated in various management and monitoring products.

AI applications require massive amounts of storage

AI workloads typically need both a massive storage repository of historical data for AI and ML model training and the capacity to absorb high-velocity incoming data streams for model inference and predictive analytics. Data sets for machine learning and artificial intelligence can reach hundreds of terabytes to petabytes. They are typically in unstructured formats like text, images, audio and video, but can also include semistructured content like web clickstreams and system logs. This makes these data sets suitable for object storage or NAS file systems.
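A quick way to see how unstructured data sets reach hundreds of terabytes is to tally them by modality. The sketch below does that; the item counts and average sizes are hypothetical placeholders, not measurements from any real corpus.

```python
# Rough sizing of a mixed, mostly unstructured training corpus.
# Item counts and average sizes are hypothetical placeholders.
from dataclasses import dataclass

@dataclass
class Modality:
    name: str
    item_count: int
    avg_bytes: int  # average size of one item, in bytes

    def total_bytes(self) -> int:
        return self.item_count * self.avg_bytes

def corpus_tb(modalities: list[Modality]) -> float:
    """Total corpus size in decimal terabytes (10**12 bytes)."""
    return sum(m.total_bytes() for m in modalities) / 10**12

corpus = [
    Modality("images", item_count=400_000_000, avg_bytes=500_000),             # ~500 KB each
    Modality("video clips", item_count=2_000_000, avg_bytes=50_000_000),       # ~50 MB each
    Modality("clickstream logs", item_count=10_000_000_000, avg_bytes=1_000),  # ~1 KB events
]

for m in corpus:
    print(f"{m.name:>17}: {m.total_bytes() / 10**12:8.1f} TB")
print(f"{'total':>17}: {corpus_tb(corpus):8.1f} TB")  # 200 + 100 + 10 = 310 TB
```

With these assumed figures the images alone account for 200 TB, which is why capacity-oriented object storage or NAS is the usual home for such data.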

AI requirements and core hardware elements

Machine and deep learning algorithms feed on data. Data selection, collection and preprocessing, such as filtering, categorization and feature extraction, are the primary factors contributing to a model’s accuracy and predictive value. Therefore, data aggregation — consolidating data from multiple sources — and storage are significant elements of AI applications that influence hardware design.
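The preprocessing steps named above (aggregation from several sources, filtering, categorization and feature extraction) can be sketched as a small pipeline. This is a toy illustration under stated assumptions: the source names, record fields, keyword rule and features are all hypothetical, and a production pipeline would run these stages at far larger scale.

```python
# Toy preprocessing pipeline: aggregate records from several sources, filter out
# unusable ones, categorize them and extract simple numeric features.
# Source names, fields and rules are hypothetical examples.
from collections import Counter
from typing import Iterable, Iterator

Record = dict  # e.g. {"source": "app", "text": "..."}

def aggregate(*sources: Iterable[Record]) -> Iterator[Record]:
    """Aggregation: consolidate records from multiple sources into one stream."""
    for source in sources:
        yield from source

def keep(record: Record) -> bool:
    """Filtering: drop records whose text is missing or blank."""
    text = record.get("text")
    return isinstance(text, str) and text.strip() != ""

def categorize(record: Record) -> str:
    """Categorization: a crude keyword rule standing in for real labeling."""
    return "error" if "error" in record["text"].lower() else "info"

def features(record: Record) -> dict:
    """Feature extraction: turn raw text into simple numeric features plus a label."""
    return {
        "n_words": len(record["text"].split()),
        "n_chars": len(record["text"]),
        "category": categorize(record),
    }

app_logs = [{"source": "app", "text": "User login ok"},
            {"source": "app", "text": "ERROR disk full"}]
sensor_feed = [{"source": "sensor", "text": ""},  # dropped by the filter
               {"source": "sensor", "text": "temp 21.5 C"}]

rows = [features(r) for r in aggregate(app_logs, sensor_feed) if keep(r)]
print(rows)
print(Counter(row["category"] for row in rows))
```

Using generators keeps the aggregation stage streaming, so records flow through filtering and feature extraction without first being collected into one large list.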

The resources required for data storage and AI computation don’t typically scale in unison. So most system designs decouple the two, with local storage in an AI compute node designed to be large and fast enough to feed the algorithm.
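"Large and fast enough to feed the algorithm" can be turned into a number: the sustained read bandwidth that keeps every GPU busy. The sketch below estimates it and how many drives would be needed; the GPU count, samples per second, sample size and per-drive read rates (about 0.5 GB/s for a SATA SSD, about 3 GB/s for an NVMe SSD) are assumed round figures, not vendor specifications.

```python
# Estimate the sustained read bandwidth local storage must deliver so GPUs are
# not left waiting for data. All figures are hypothetical round numbers.
import math

def required_read_gb_per_s(num_gpus: int, samples_per_s_per_gpu: float,
                           avg_sample_bytes: float) -> float:
    """Aggregate read bandwidth in decimal GB/s needed to keep every GPU busy."""
    return num_gpus * samples_per_s_per_gpu * avg_sample_bytes / 10**9

def drives_needed(need_gb_per_s: float, per_drive_gb_per_s: float) -> int:
    """Smallest number of drives whose combined read rate meets the requirement."""
    return math.ceil(need_gb_per_s / per_drive_gb_per_s)

# Example: 8 GPUs, each consuming 2,500 images/s of ~110 KB each.
need = required_read_gb_per_s(num_gpus=8, samples_per_s_per_gpu=2_500,
                              avg_sample_bytes=110_000)
print(f"Sustained reads needed: {need:.1f} GB/s")

for kind, rate in [("SATA SSD", 0.5), ("NVMe SSD", 3.0)]:
    print(f"{kind}: {drives_needed(need, rate)} drive(s) at ~{rate} GB/s each")
```

Because this requirement grows with the number of GPUs rather than with the total size of the data set, it illustrates why designs size local compute-node storage for speed and keep bulk capacity in a separate, decoupled tier.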

Machine and deep learning algorithms require a massive number of floating-point multiply-accumulate operations for matrix multiplication. Because these matrix calculations can be performed in parallel, ML and DL resemble graphics calculations like pixel shading and ray tracing, which graphics processing units (GPUs) greatly accelerate.
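The following sketch writes a naive matrix multiplication as explicit multiply-accumulate (MAC) steps to show where the parallelism comes from: every output element is an independent dot product. It is plain Python for readability, not how GPU libraries implement it, and the 4096-sized shape in the FLOP count is an arbitrary example.

```python
# A naive matrix multiply written as explicit multiply-accumulate (MAC) steps.
# Each output C[i][j] depends only on row i of A and column j of B, so all
# M*N dot products are independent and could be computed in parallel.

def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    m, k = len(a), len(a[0])
    k2, n = len(b), len(b[0])
    if k != k2:
        raise ValueError("inner dimensions must match")
    c = [[0.0] * n for _ in range(m)]
    for i in range(m):          # the (i, j) iterations do not depend on each
        for j in range(n):      # other, so each could run on its own GPU thread
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply plus one add: one MAC
            c[i][j] = acc
    return c

def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product: m*n*k MACs, counted as 2 FLOPs each."""
    return 2 * m * n * k

a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]
print(matmul(a, b))  # [[19.0, 22.0], [43.0, 50.0]]

# For scale: a single product of two 4096 x 4096 matrices.
print(f"{matmul_flops(4096, 4096, 4096):.3e} FLOPs")  # 1.374e+11 FLOPs
```

A model performs many such products per training step, which is why hardware that executes thousands of independent MACs at once pays off.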

However, unlike computer-generated imagery (CGI), ML and DL calculations often don't require double-precision (64-bit) or even single-precision (32-bit) accuracy. This allows a further boost in performance by reducing the number of floating-point bits used in the calculations. So, although early deep learning research over the past decade relied on off-the-shelf GPU accelerator cards, the leading GPU manufacturer, Nvidia, has built a separate product line of data center GPUs tailored to scientific and AI workloads.
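The trade-off behind reduced precision can be seen with Python's standard `struct` module, which can pack values as IEEE 754 64-bit (`d`), 32-bit (`f`) and 16-bit (`e`) floats. This sketch only shows storage size and rounding error; it does not model the arithmetic speedups that reduced-precision hardware such as Tensor Cores provides, and the 1-billion-parameter model is a hypothetical example.

```python
# Compare storage size and rounding error of 64-, 32- and 16-bit floats.
import struct

FORMATS = [("float64", "<d"), ("float32", "<f"), ("float16", "<e")]

def round_trip(x: float, fmt: str) -> float:
    """Store x in the given IEEE 754 binary format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, x))[0]

value = 0.1
for label, fmt in FORMATS:
    stored = round_trip(value, fmt)
    print(f"{label}: {struct.calcsize(fmt)} bytes, 0.1 stored as {stored!r}, "
          f"error {abs(stored - value):.1e}")

# Memory for the weights of a hypothetical 1-billion-parameter model.
params = 1_000_000_000
for label, fmt in FORMATS:
    print(f"{label} weights: {params * struct.calcsize(fmt) / 10**9:.0f} GB")  # 8, 4, 2 GB
```

Halving the bits halves memory footprint and bandwidth per value at the cost of a larger rounding error, an error that many ML and DL workloads tolerate.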

System requirements and components

The system components most critical to AI performance are the following:

- CPU. Responsible for operating the VM or container subsystem, dispatching code to GPUs and handling I/O. Current products use a second-generation Intel Xeon Scalable Platinum or Gold processor, although systems using second-generation (Rome) AMD Epyc CPUs are becoming more popular. Current-generation CPUs have added features that significantly accelerate ML and DL inference operations, making them suitable for production AI workloads that use models previously trained on GPUs.

- GPU. Handles ML or DL training and (often) inferencing, that is, applying a trained model to new data, for example to categorize it automatically. For training, this is typically an Nvidia P100 (Pascal), V100 (Volta) or A100 (Ampere) GPU; for inference, a V100, A100 or T4 (Turing). AMD hasn't achieved much penetration with system vendors for its Instinct (Vega) GPUs; however, several OEMs now offer products in 1U-4U or Open Compute Project 21-inch form factors.

- Memory. AI operations run from GPU memory, so system memory isn't usually a bottleneck, and servers typically have 128 to 512 GB of DRAM. Current GPUs use embedded high-bandwidth memory (HBM) modules (16 or 32 GB for the Nvidia V100, 40 GB for the A100) that are much faster than conventional DDR4 or GDDR5 DRAM. Thus, a system with eight GPUs might have an aggregate of 256 GB (8 × 32 GB) or 320 GB (8 × 40 GB) of HBM for AI operations.

- Network. Because AI systems are often clustered together to scale performance, systems have multiple 10 Gbps or faster Ethernet interfaces. Some also include InfiniBand or dedicated GPU (NVLink) interfaces for intracluster communications.

- Storage IOPS. Moving data between the storage and compute subsystems is another performance bottleneck for AI workloads, so most systems use local NVMe drives, which deliver far higher IOPS and throughput than SATA SSDs.
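The HBM arithmetic in the memory item can be captured in a few lines. This sketch uses only the per-GPU capacities quoted above; the 24 GB per-GPU working set is a hypothetical figure. Note that each GPU's HBM is a separate pool, so the aggregate is a system total, while whatever a single GPU processes must fit in that GPU's own memory.

```python
# Aggregate and per-GPU HBM checks, using the capacities quoted in the text.
from dataclasses import dataclass

@dataclass(frozen=True)
class GpuModel:
    name: str
    hbm_gb: int  # on-package high-bandwidth memory per GPU

V100_16 = GpuModel("V100 16 GB", 16)
V100_32 = GpuModel("V100 32 GB", 32)
A100_40 = GpuModel("A100 40 GB", 40)

def aggregate_hbm_gb(gpu: GpuModel, count: int) -> int:
    """Total HBM across `count` identical GPUs in one system."""
    return gpu.hbm_gb * count

def fits_on_one_gpu(working_set_gb: float, gpu: GpuModel) -> bool:
    """Each GPU has its own HBM, so a per-GPU working set must fit in one device."""
    return working_set_gb <= gpu.hbm_gb

for gpu in (V100_16, V100_32, A100_40):
    print(f"8 x {gpu.name}: {aggregate_hbm_gb(gpu, 8)} GB HBM total, "
          f"24 GB working set fits per GPU: {fits_on_one_gpu(24, gpu)}")
```

The 32 GB V100 and 40 GB A100 cases reproduce the 256 GB and 320 GB totals; the 16 GB V100 variant would give 128 GB.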

What can iRender's cloud computing service provide?

GPUs have been the workhorse for most AI workloads, and Nvidia has significantly improved their DL performance through features such as Tensor Cores, Multi-Instance GPU (to run multiple processes in parallel) and NVLink GPU interconnects. However, the increased need for speed and efficiency has spawned a host of new AI processors, such as Google's TPU, Intel's Habana AI processors, the Tachyum Universal Processor and the Wave AI SoC, as well as field-programmable gate array (FPGA)-based solutions like Microsoft Brainwave, a deep learning platform for AI in the cloud.

Enterprises can use any hyper-converged infrastructure or high-density system for AI by choosing the right configuration and system components. One option is a GPU cloud service such as iRender. Let's look at iRender's GPU cloud server packages below.

At iRender, we provide a fast, powerful and efficient solution for deep learning users, with configuration packages of 1 to 6 RTX 3090 GPUs on both Windows and Ubuntu. Our 24/7 professional support, GPUhub Sync (our powerful, free and convenient tool for storing and transferring data) and affordable pricing make your training process more efficient.

Register an account today to experience our service, or contact us via WhatsApp at (+84) 912 785 500 for advice and support.

Thank you & Happy Training!

Reference source: searchconvergedinfrastructure.techtarget.com
