Is the RTX 3090 the best GPU for Deep Learning?

Deep learning is a computationally demanding field, and the training phase takes considerable time and resources. As the number of parameters grows, training takes longer. Your resources stay tied up for longer, and you spend more of your valuable time waiting.

Graphics processing units (GPUs) can reduce these costs. They run training tasks in parallel, distribute work across processor clusters, and execute many calculations simultaneously. As a result, you can train models with large numbers of parameters quickly and efficiently.

Today, let’s look at a comparison from bizon-tech.com of three GPUs from NVIDIA’s newest 30 series: the RTX 3090, RTX 3080, and RTX 3070. The goal is to find the best GPU for deep learning. In 2022 these became some of the most popular and sought-after graphics cards for deep learning, thanks to their enormous upgrade over NVIDIA’s 20 series, released in 2018.

Methodology

- Used TensorFlow’s standard “py” benchmark script from the official GitHub repository (refer here for more details).

- Ran tests on the following networks: ResNet-50, ResNet-152, Inception v3, Inception v4, and VGG-16.

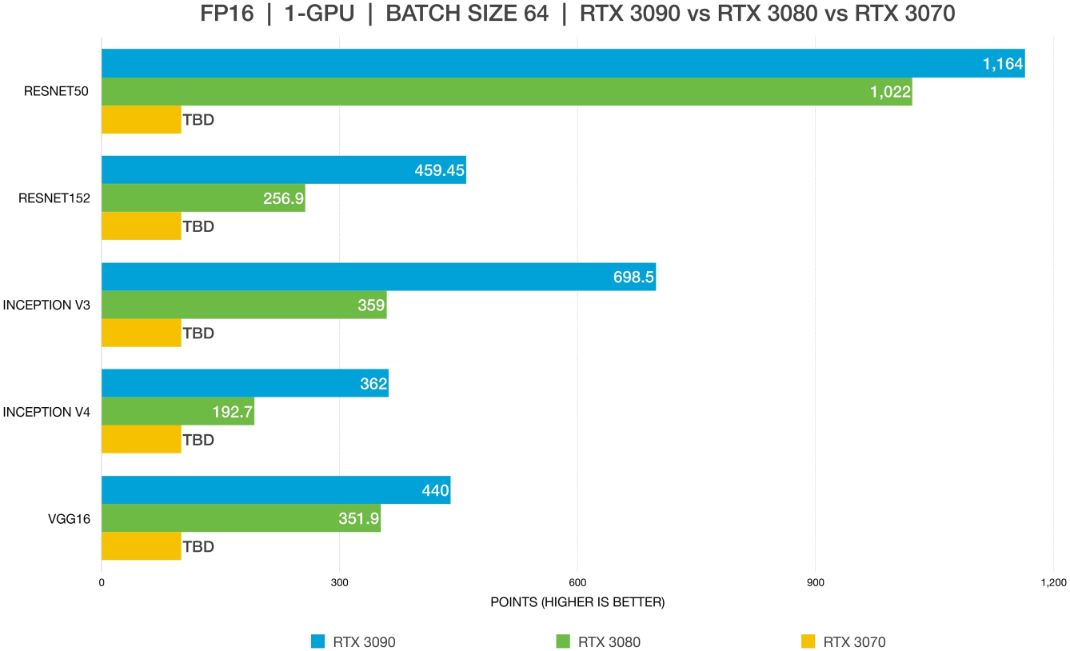

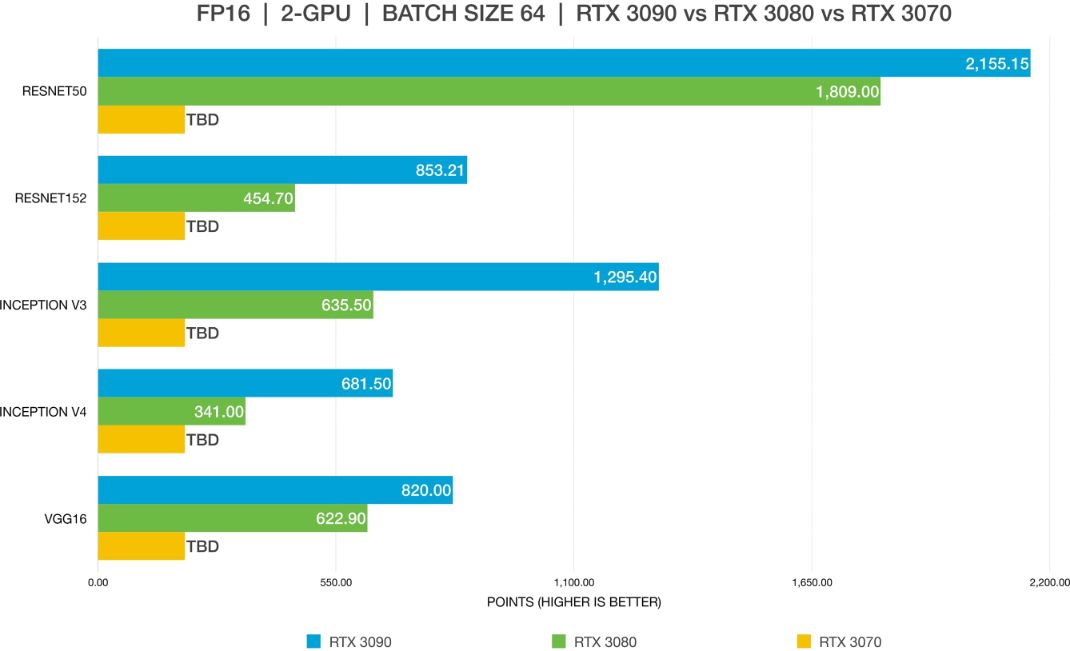

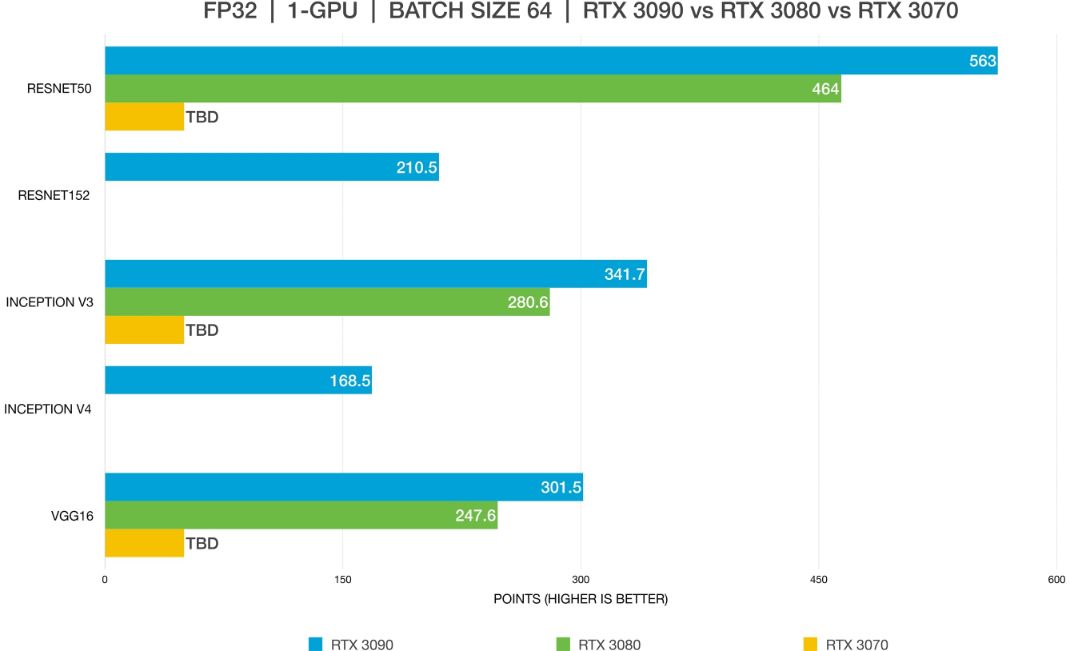

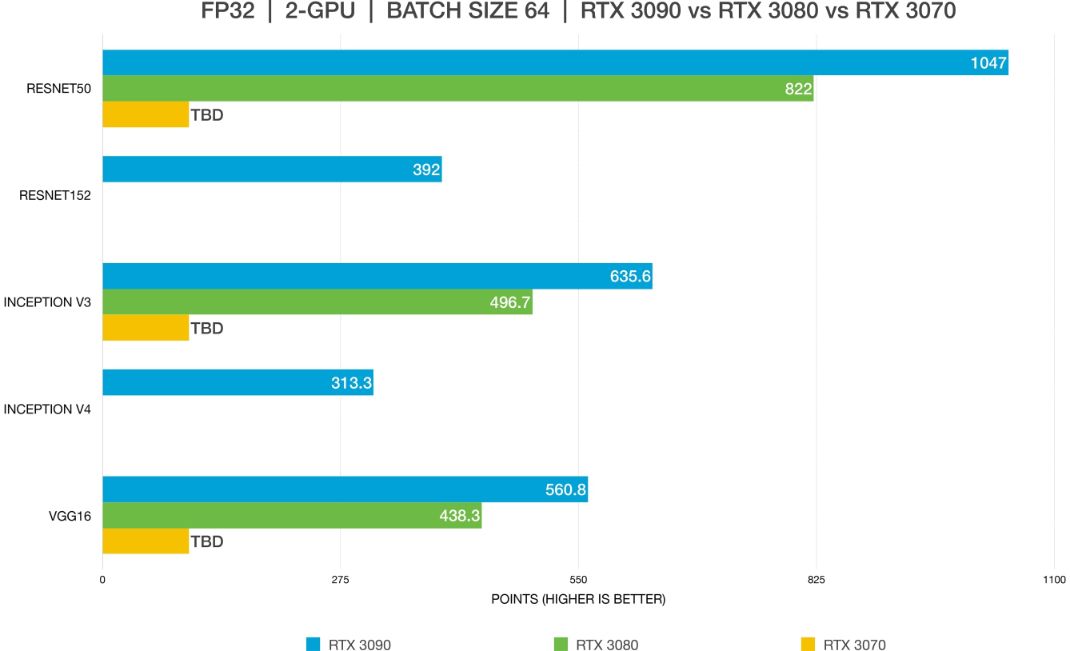

- Compared FP16 to FP32 performance using standard batch sizes (64 in most cases).

- Compared GPU scaling on all 30-series GPUs using up to 2x GPUs, and on the A6000 using up to 4x GPUs.

To compare benchmark data from multiple workstations accurately, the Bizon-tech team kept the environment consistent by installing the same driver and framework versions on each workstation. A controlled environment is essential for producing valid, comparable data.
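The Bizon-tech numbers come from TensorFlow’s benchmark script, which does its own timing. As a framework-agnostic illustration of the two quantities the methodology compares, here is a minimal Python sketch. It assumes throughput is reported as images per second and that multi-GPU scaling is judged against perfect linear speedup. `measure_throughput`, `scaling_efficiency`, and the dummy step are hypothetical helpers, not part of the benchmark script. The multi-GPU throughput figures in the usage section are made up for illustration.

```python
import time
from typing import Callable, Dict


def measure_throughput(step: Callable[[], None], batch_size: int,
                       warmup_steps: int = 10, timed_steps: int = 100) -> float:
    """Return images per second for a callable that runs one training step.

    Warm-up steps run first and are excluded from timing, because early
    iterations often include one-off costs (memory allocation, graph
    compilation) that would skew the average.
    """
    for _ in range(warmup_steps):
        step()
    start = time.perf_counter()
    for _ in range(timed_steps):
        step()
    elapsed = time.perf_counter() - start
    return batch_size * timed_steps / elapsed


def scaling_efficiency(throughput_by_gpus: Dict[int, float]) -> Dict[int, float]:
    """Throughput of N GPUs divided by N times the single-GPU throughput.

    1.0 means perfect linear scaling; lower values mean each added GPU
    contributes less than a full GPU's worth of speedup.
    """
    single = throughput_by_gpus[1]
    return {n: tput / (n * single) for n, tput in throughput_by_gpus.items()}


if __name__ == "__main__":
    # Stand-in for a real training step: a small CPU-bound loop, not a model.
    def dummy_step() -> None:
        sum(i * i for i in range(10_000))

    print(f"dummy step: {measure_throughput(dummy_step, batch_size=64):.0f} images/sec")

    # Hypothetical throughputs (images/sec) for 1, 2 and 4 GPUs.
    print(scaling_efficiency({1: 1000.0, 2: 1900.0, 4: 3600.0}))
```

On a real GPU, the step callable must wait for the device to finish before returning. Frameworks queue GPU work asynchronously, so without that wait the timer would mostly measure kernel launches.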

Hardware

The Bizon-tech team used two workstations for this test.

First workstation:

- CPU: Intel Core i9-10980XE 18-Core 3.00GHz

- Overclocking: Stage #3 +600 MHz (up to +30% performance)

- Cooling: Liquid Cooling System (CPU; extra stability and low noise)

- Memory: 256 GB (8 x 32 GB) DDR4 3200 MHz

- Operating System: BIZON Z–Stack (Ubuntu 20.04 with preinstalled deep learning frameworks)

- Storage: 1 TB PCIe SSD

- Network: 10 Gbit

Second workstation:

- CPU: Intel Core i9-10980XE 18-Core 3.00GHz

- Overclocking: Stage #3 +600 MHz (up to +30% performance)

- Cooling: Custom water-cooling system (CPU + GPUs)

- Memory: 256 GB (8 x 32 GB) DDR4 3200 MHz

- Operating System: BIZON Z–Stack (Ubuntu 20.04 with preinstalled deep learning frameworks)

- Storage: 1 TB PCIe SSD

- Network: 10 Gbit

Software

Deep Learning Models:

- ResNet-50

- ResNet-152

- Inception v3

- Inception v4

- VGG-16

Drivers and Batch Size:

- NVIDIA driver: 455

- CUDA: 11.1

- TensorFlow: 1.x

- Batch size: 64

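The Methodology section stresses that every workstation ran the same driver and framework versions. Below is a minimal Python sketch of such a check. It assumes each machine’s versions have already been collected into a dictionary (for example from `nvidia-smi` output and `tf.__version__`) and compares them against the stack listed above. The helper names and the reported versions in the usage section are hypothetical.

```python
from typing import Dict, List

# Reference stack from the "Drivers and Batch Size" list. TensorFlow is given
# only as "1.x", so just the major version is pinned here.
REFERENCE: Dict[str, str] = {"nvidia_driver": "455", "cuda": "11.1", "tensorflow": "1"}


def version_matches(actual: str, expected: str) -> bool:
    """True if the dot-separated parts of `expected` are a prefix of `actual`.

    "455" matches "455.38" and "11.1" matches "11.1.1", but "11.1" does not
    match "11.10".
    """
    want = expected.split(".")
    return actual.split(".")[:len(want)] == want


def mismatches(reported: Dict[str, str],
               reference: Dict[str, str] = REFERENCE) -> List[str]:
    """Describe every component whose reported version differs from the reference."""
    problems = []
    for component, expected in reference.items():
        actual = reported.get(component)
        if actual is None or not version_matches(actual, expected):
            problems.append(f"{component}: expected {expected}, got {actual}")
    return problems


if __name__ == "__main__":
    # Hypothetical versions as collected from each workstation.
    workstations = {
        "workstation-1": {"nvidia_driver": "455.38", "cuda": "11.1", "tensorflow": "1.15.4"},
        "workstation-2": {"nvidia_driver": "455.38", "cuda": "11.0", "tensorflow": "1.15.4"},
    }
    for name, reported in workstations.items():
        problems = mismatches(reported)
        print(name, "consistent" if not problems else problems)
```

Comparing dot-separated parts rather than raw string prefixes avoids a subtle false match, such as treating CUDA "11.10" as "11.1".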

Benchmarks

Conclusion

The RTX 3090 is the best choice if you want excellent performance. It is the only GPU in the 30 series that can scale with an NVLink bridge. When two cards are paired with an NVLink bridge, you effectively have 48 GB of memory for training large models.

The RTX 3080 is also an excellent GPU for deep learning. Its one limitation is VRAM size. Training on the RTX 3080 requires smaller batch sizes, and people working with larger models may not be able to train them at all.

Compared with the RTX 3090 and RTX 3080, the RTX 3070 is not a good choice, because its performance is much lower. Like the RTX 3080, it is also limited by VRAM size, so training on it requires even smaller batch sizes.

For most users, the RTX 3090 or the RTX 3080 will provide the best performance. The only limitation of the RTX 3080 is its 10 GB of VRAM. Large batch sizes let models train faster and save a lot of time. In the latest generation, only the A6000 and the RTX 3090 make that possible. Using FP16 lets models fit on GPUs with insufficient VRAM, because each value takes 2 bytes instead of the 4 bytes used by FP32. Charts #3 and #4 show that the RTX 3080 cannot fit ResNet-152 and Inception v4 at FP32. Once switched to FP16, the models fit. The RTX 3090’s 24 GB of VRAM is more than enough for most use cases, with room for almost any model and large batch sizes.
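To see why VRAM caps the batch size and why FP16 helps, here is a back-of-the-envelope Python sketch. It assumes memory is dominated by weights, gradients, optimizer buffers, and per-sample activations, all stored at the same precision (4 bytes for FP32, 2 bytes for FP16). Real frameworks add workspace and allocator overhead. Mixed-precision training also usually keeps an FP32 master copy of the weights, so actual savings are smaller than 2x. The model size and activation count below are hypothetical, not measured from ResNet-152 or Inception v4.

```python
BYTES_PER_VALUE = {"fp32": 4, "fp16": 2}
GIB = 1024 ** 3


def fixed_bytes(params: int, precision: str, optimizer_buffers: int = 1) -> int:
    """Memory independent of batch size: weights, gradients, optimizer state.

    optimizer_buffers is the number of extra per-parameter buffers the
    optimizer keeps (e.g. 1 for SGD with momentum, 2 for Adam).
    """
    return BYTES_PER_VALUE[precision] * params * (2 + optimizer_buffers)


def training_memory_bytes(params: int, activations_per_sample: int, batch_size: int,
                          precision: str, optimizer_buffers: int = 1) -> int:
    """Rough training footprint: fixed per-parameter memory plus batch activations."""
    activations = BYTES_PER_VALUE[precision] * activations_per_sample * batch_size
    return fixed_bytes(params, precision, optimizer_buffers) + activations


def max_batch_size(vram_gib: float, params: int, activations_per_sample: int,
                   precision: str, optimizer_buffers: int = 1) -> int:
    """Largest batch whose estimated footprint fits in `vram_gib` (0 if none fits)."""
    budget = int(vram_gib * GIB)
    fixed = fixed_bytes(params, precision, optimizer_buffers)
    if fixed >= budget:
        return 0
    per_sample = BYTES_PER_VALUE[precision] * activations_per_sample
    return (budget - fixed) // per_sample


if __name__ == "__main__":
    # Hypothetical model: 60M parameters and 40M activation values per sample.
    params, activations = 60_000_000, 40_000_000
    for gpu, vram in [("RTX 3080", 10), ("RTX 3090", 24)]:
        for precision in ("fp32", "fp16"):
            print(gpu, precision, max_batch_size(vram, params, activations, precision))
```

With these made-up numbers, the estimate allows a batch of 62 at FP32 on a 10 GB card, just short of the benchmark’s batch size of 64. At FP16 it allows 129. This illustrates the kind of pattern the charts show for the RTX 3080, though it does not reproduce the real figures.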

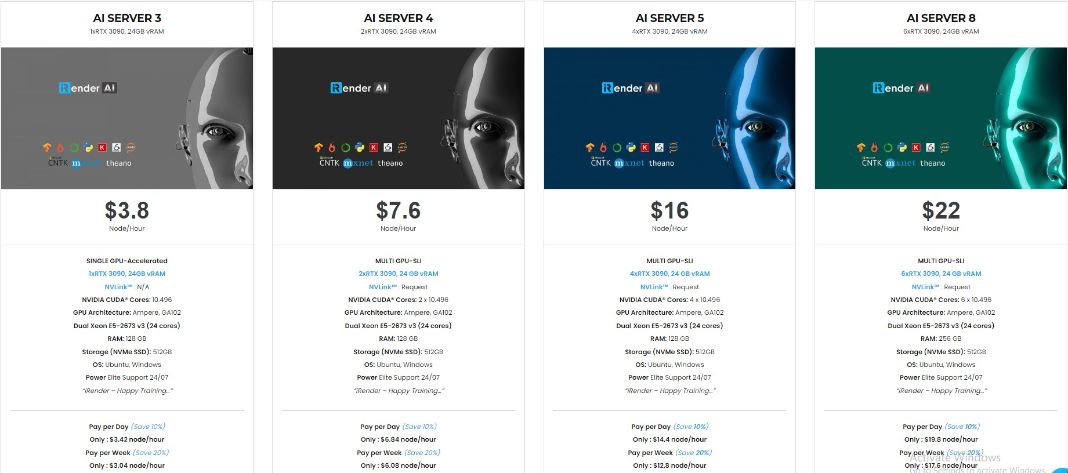

iRender currently provides a GPU Cloud for AI/DL service so that users can train their models. Our high-configuration, high-performance machines (RTX 3090) let you install any software you need. With just a few clicks, you can access a machine and take full control of it, and your model training can run many times faster.

We also provide other features. NVLink is available if you need more VRAM, and Gpuhub Sync transfers and syncs files faster. Fixed Rental saves 10-20% of credits compared with hourly rental (10% for daily rental, 20% for weekly and monthly rental).
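The Fixed Rental savings come down to simple arithmetic. Here is a minimal Python sketch. It assumes the discount is a flat percentage off the equivalent hourly cost, since the text does not say exactly how it is applied. It also uses a hypothetical rate of 10 credits per hour, not an actual iRender price.

```python
# Discounts stated above: 10% for daily, 20% for weekly and monthly fixed rental.
# Assumption: applied as a flat percentage off the equivalent hourly cost.
FIXED_RENTAL_DISCOUNT = {"hourly": 0.0, "daily": 0.10, "weekly": 0.20, "monthly": 0.20}


def rental_cost(hours: float, hourly_rate: float, plan: str = "hourly") -> float:
    """Credits spent for `hours` of GPU time at `hourly_rate` under `plan`."""
    return hours * hourly_rate * (1.0 - FIXED_RENTAL_DISCOUNT[plan])


if __name__ == "__main__":
    rate = 10.0  # hypothetical credits per hour, not a real price
    for plan, hours in [("hourly", 24), ("daily", 24), ("weekly", 24 * 7)]:
        cost = rental_cost(hours, rate, plan)
        saved = rental_cost(hours, rate) - cost
        print(f"{plan:>7}: {cost:.1f} credits for {hours} h (saves {saved:.1f})")
```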

Register an account today to experience our service, or contact us via WhatsApp at (+84) 916806116 for advice and support.

Thank you & Happy Training!

Source: bizon-tech.com