How Redshift leverages hardware: GPU, CPU & Memory requirements explained

To get the best performance from Redshift, it helps to understand how it uses different hardware components. Redshift is mainly a GPU renderer, so the GPU plays the primary role, but certain preprocessing stages depend on the CPU, disk, and network. These stages include extracting meshes, loading textures, and preparing scenes. In this article, we examine “How Redshift leverages hardware: GPU, CPU & Memory” to clarify the hardware specifications this renowned renderer needs.

GPU

Currently, Redshift supports NVIDIA GPUs on Windows and Linux. It also supports AMD GPUs on Windows and on macOS Big Sur or later.

NVIDIA GPUs (for Windows and Linux)

Among NVIDIA GPUs, the recommended cards are the last-gen GeForce RTX 3060 Ti, RTX 3070, RTX 3080, and RTX 3090, and the current-gen GeForce RTX 4090. Among professional GPUs, the recommendations are the last-gen Quadro RTX 5000 and Quadro RTX 6000, or the current-gen RTX A6000. Note that there is almost no performance difference between GeForce and Quadro GPUs for Redshift rendering. Quadros can typically render OpenGL viewports faster than GeForce cards, but this does not affect Redshift’s rendering performance. The main benefit of Quadros over GeForce cards is that they often have more onboard video memory (VRAM), which can be important when rendering very large scenes.

Redshift allows the mixing of GeForce and Quadro GPUs on one computer.

An important difference between GeForce GPUs and Titan/Quadro/Tesla GPUs is the availability of the TCC driver. TCC stands for “Tesla Compute Cluster”, a special NVIDIA driver mode for Windows that bypasses the Windows Display Driver Model (WDDM) and allows faster communication between the GPU and CPU. The downside is that once TCC is enabled, the GPU becomes “invisible” to Windows and to 3D apps like Maya and Houdini, and it works only with CUDA apps like Redshift. Only Quadro, Tesla, and Titan GPUs support TCC; GeForce cards cannot use it. TCC is only useful on Windows. Linux does not need it, because the Linux display driver does not have the communication latency issues of WDDM. In other words, CPU-GPU communication is faster by default on Linux than on Windows (with WDDM) across all NVIDIA GPUs, including GeForce and Quadro/Tesla/Titan GPUs.

Although GeForce GPUs lack TCC support, you can still get some of its latency benefits on Windows by enabling “Hardware-accelerated GPU scheduling”. This feature was introduced in a 2020 Windows 10 update together with compatible NVIDIA drivers. It is turned on in the Windows graphics settings and takes effect after a restart.

AMD GPUs (for Windows, macOS Big Sur or later)

Currently, AMD GPUs are supported on Windows and on macOS Big Sur or later. For the list of supported AMD GPUs, see Maxon’s Redshift system requirements.

Multiple GPUs

Redshift supports a maximum of 8 GPUs per session. It will render faster when you install multiple GPUs on the same computer. However, using multiple GPUs may require a specialized motherboard, CPU, or system configuration.

VRAM

GPUs come with different amounts of video memory (VRAM), such as 8GB, 11GB, 12GB, 16GB, 24GB, or 48GB. So how does VRAM affect performance, how much VRAM is enough, and which GPU should you buy for Redshift? Let’s take a deep dive into how Redshift uses VRAM so that you can make the right decision when choosing a GPU.

For Redshift and other GPU renderers, the general rule is that more VRAM is better. However, GPUs with higher VRAM are more expensive.

Redshift uses VRAM efficiently. It can fit around 20-30 million unique triangles in approximately 1GB of VRAM, so a scene with 300 million triangles would typically need roughly 10-15GB of VRAM. However, GPUs with 8GB of VRAM can still render such high-poly scenes thanks to Redshift’s “out-of-core” technique, which lets it work with more data than fits in VRAM. Nevertheless, heavy out-of-core data access can hurt performance, so it is better to have plenty of available VRAM when rendering high-polygon scenes.
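
A back-of-the-envelope sketch of the rule of thumb above: it converts a triangle count into a VRAM range using the quoted 20-30 million triangles per GB. The function names are hypothetical, and the estimate covers geometry only (textures, volumes, and frame buffers are ignored), so treat the result as a rough lower bound, not a Redshift measurement.

```python
# Rough geometry-only VRAM estimate based on the rule of thumb in the text:
# Redshift fits about 20-30 million unique triangles per 1GB of VRAM.
# Assumption: textures, volumes (e.g. OpenVDB) and frame buffers are ignored.

TRIANGLES_PER_GB_LOW = 20_000_000   # pessimistic packing
TRIANGLES_PER_GB_HIGH = 30_000_000  # optimistic packing


def estimate_geometry_vram_gb(unique_triangles: int) -> tuple[float, float]:
    """Return (best_case_gb, worst_case_gb) of VRAM for the geometry alone."""
    best = unique_triangles / TRIANGLES_PER_GB_HIGH
    worst = unique_triangles / TRIANGLES_PER_GB_LOW
    return best, worst


def likely_out_of_core(unique_triangles: int, free_vram_gb: float) -> bool:
    """True if even the best-case estimate exceeds the VRAM free for Redshift."""
    best, _ = estimate_geometry_vram_gb(unique_triangles)
    return best > free_vram_gb


if __name__ == "__main__":
    best, worst = estimate_geometry_vram_gb(300_000_000)
    print(f"300M triangles: about {best:.0f}-{worst:.0f} GB of VRAM")  # 10-15 GB
    print(likely_out_of_core(300_000_000, free_vram_gb=8.0))  # True
```

Comparing against the VRAM actually *free* for Redshift, rather than the card's nominal size, matters because other apps also consume VRAM.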

Redshift’s out-of-core technique does not work for all data types. It currently cannot store volume grids like OpenVDB out of core. Scenes using many hundreds of megabytes of OpenVDB may require a GPU with more VRAM; otherwise, rendering will fail.

Having more VRAM also makes it easier to run multiple GPU apps at the same time. Apps like Maya’s OpenGL viewport, web browsers, and Windows itself can use significant VRAM, leaving little for Redshift. This is less of an issue on GPUs with abundant VRAM. Alternatively, you can add an extra, cheaper GPU just for those other apps, which frees the full VRAM of the remaining GPU(s) for Redshift rendering.

VRAM capacity is often what decides between an expensive 24GB GPU and a cheaper 11GB or 12GB option. Note that the VRAM of multiple GPUs does not combine. For example, a 12GB GPU and a 24GB GPU installed in the same computer do not add up to 36GB. Each GPU uses its own VRAM unless the GPUs are linked with NVLink, which can “bridge” them and allow memory sharing at some performance cost. Redshift can detect and use NVLink when it is available.
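
To make the “VRAM does not add up” point concrete, here is a small sketch that checks which individual GPUs can hold a scene in-core. The `Gpu` class, card names, and scene sizes are illustrative assumptions, not a Redshift API, and NVLink memory sharing is deliberately not modeled.

```python
# Sketch: without NVLink, each GPU can only use its own VRAM, so the VRAM of
# several cards never forms one big pool for a render.
# The Gpu class, names and sizes below are illustrative, not a Redshift API.

from dataclasses import dataclass


@dataclass
class Gpu:
    name: str
    vram_gb: float


def naive_total_vram_gb(gpus: list[Gpu]) -> float:
    """The misconception: adding every card's VRAM together."""
    return sum(g.vram_gb for g in gpus)


def gpus_that_fit(scene_gb: float, gpus: list[Gpu]) -> list[str]:
    """Names of GPUs whose *own* VRAM can hold the scene in-core."""
    return [g.name for g in gpus if g.vram_gb >= scene_gb]


rig = [Gpu("12GB card", 12.0), Gpu("24GB card", 24.0)]
print(naive_total_vram_gb(rig))  # 36.0, but no single GPU has 36GB
print(gpus_that_fit(20.0, rig))  # ['24GB card']
print(gpus_that_fit(30.0, rig))  # [] (out-of-core, or failure for OpenVDB)
```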

To summarize:

- If you need more VRAM, Titan/Quadro/Tesla NVIDIA GPUs or Radeon Pro AMD GPUs are better choices. If you don’t need extra VRAM, several cheaper consumer GPUs can provide more processing power for the same cost, leading to faster render times than a single high-end GPU.

- If you are not going to dedicate an extra GPU to OpenGL or 2D work, or if you want to render heavy scenes (150+ million triangles, or lots of OpenVDB or particles), choose a GPU with more VRAM.

CPU

While Redshift mainly relies on GPU performance for rendering, certain steps depend on CPU performance as well as on disk and network speed. These include extracting mesh data from 3D applications, loading textures from disk, and preparing scene data for the GPU. For complex scenes, these steps can take significant time, so a lower-end CPU may slow down, or “bottleneck”, overall rendering performance.

Redshift performs better with CPUs that have strong single-threaded performance. A CPU with fewer cores but a higher clock speed is better than one with more cores and a lower clock speed. For example, a 6-core CPU running at 3.5GHz will work better than an 8-core CPU at 2.5GHz. Maxon recommends CPUs with clock speeds of 3.5GHz or higher.

Not all CPUs can drive 4 GPUs at full PCIe x16 speed. CPUs provide a limited number of “PCIe lanes”, which determine the data transfer bandwidth between the CPU and the GPUs. Some CPUs have fewer lanes than others, which limits how many GPUs they can support and at what speed. For example, the Core i7-5820K (3.3GHz) has 28 lanes, while the Core i7-5930K (3.5GHz) has 40 lanes, so the 5930K can drive more GPUs at higher speed. If you are building a multi-GPU render system, we recommend CPUs with more PCIe lanes. Lower-end CPUs like the Core i5, Core i3, and below are not recommended.
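
The following sketch shows why lane count matters, under a deliberately simplified model: the CPU’s lanes are split evenly among the GPUs, and each link is rounded down to a standard PCIe width. This model is an assumption for illustration only; real motherboards wire their slots in fixed layouts (and some add PCIe switch chips), so check the board manual for the link widths you will actually get.

```python
# Simplified model: split a CPU's PCIe lanes evenly across GPUs and round each
# link down to a standard width. Assumptions: all lanes go to GPUs, no PCIe
# switch chips, and slots can be split freely. Real boards differ.

STANDARD_WIDTHS = (16, 8, 4, 2, 1)


def link_width_per_gpu(cpu_lanes: int, num_gpus: int) -> int:
    """Widest standard PCIe link each GPU could get under an even split."""
    if num_gpus < 1:
        raise ValueError("need at least one GPU")
    share = cpu_lanes // num_gpus
    for width in STANDARD_WIDTHS:
        if share >= width:
            return width
    return 0  # not enough lanes to give every GPU even an x1 link


for cpu, lanes in (("Core i7-5820K", 28), ("Core i7-5930K", 40)):
    widths = {n: link_width_per_gpu(lanes, n) for n in (1, 2, 3, 4)}
    print(cpu, widths)  # maps GPU count -> per-GPU link width (x16, x8, ...)
```

Under this model, the 28-lane CPU drops to x4 links with 4 GPUs, while the 40-lane CPU keeps x8 links.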

With multiple CPUs on one motherboard (e.g. Xeons), the PCIe lanes of the CPUs combine, which lets dual-Xeon systems easily power 8 GPUs.

In summary, Redshift favors clock speed (GHz) over core count. For multiple GPUs, consider a high-end Core i7 or AMD CPU. For a larger multi-GPU system (more than 4 GPUs), consider dual-Xeon options. Avoid Core i5, Core i3, and other low-end CPUs.

RAM (Memory)

You should have at least twice as much CPU memory (RAM) as the largest amount of VRAM in the installed GPUs. For example, if you use one or more Titan X GPUs with 12GB VRAM, the system should have at least 24GB of RAM.

If you render multiple frames simultaneously, the RAM needs to be multiplied accordingly. For example, if rendering one frame needs 16GB RAM, rendering two frames at once will require around 32GB RAM.

It is recommended to have plenty of system RAM if you will be installing multiple GPUs on one computer.
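
The RAM rules above can be combined into a quick sizing helper. Treating the “2x the largest VRAM” rule as the per-frame requirement and multiplying it by the number of concurrent frames is our assumption for illustration, not a Maxon formula, and the function name is hypothetical.

```python
# Sizing helper for system RAM, combining two rules of thumb from the text:
#   1) RAM >= 2x the largest VRAM among the installed GPUs
#   2) RAM needs scale with the number of frames rendered at once
# Assumption: rule 1 is treated as the per-frame requirement for rule 2.


def min_system_ram_gb(gpu_vram_gb: list[float], concurrent_frames: int = 1) -> float:
    """Suggested minimum system RAM in GB."""
    if not gpu_vram_gb:
        raise ValueError("list the VRAM of at least one GPU")
    if concurrent_frames < 1:
        raise ValueError("concurrent_frames must be at least 1")
    return 2 * max(gpu_vram_gb) * concurrent_frames


print(min_system_ram_gb([12, 12]))                       # 24: two 12GB Titan X cards
print(min_system_ram_gb([24] * 4, concurrent_frames=2))  # 96
```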

Disk (Storage)

You should use fast solid-state drives (SSDs) with Redshift. Redshift automatically converts textures such as JPG, EXR, PNG, and TIFF files into its own texture format, which is faster to load and use during rendering. The converted textures are stored in a local cache folder, so placing that texture cache folder on an SSD ensures the converted files can be opened quickly during rendering.

Alternatively, Redshift can open textures directly from their original locations (even if it is a network folder) without caching, but we do not recommend this approach.

Multiple GPU scaling in Redshift

Redshift is well known as a multi-GPU renderer. Almost all users want to speed up rendering with multiple GPUs and expect rendering to become proportionally faster. Yet Redshift doesn’t scale perfectly across multiple GPUs: rendering with 4 GPUs is not necessarily 4 times faster than rendering with a single GPU.

When rendering with multiple GPUs in Redshift, you can choose between two approaches:

- Rendering a single frame using all GPUs together

- Rendering multiple frames at once, each on a subset of the GPUs

In some cases, rendering a single frame with all of the system’s GPUs does not scale linearly with GPU count. For example, 4 GPUs might not render exactly 4 times faster than 1 GPU; they might be only about 3 times faster. This is because a certain amount of per-frame CPU processing cannot be sped up by adding extra GPUs.

To better illustrate this, consider the following example.

Suppose extracting scene data from Maya takes 10 seconds on the CPU, and rendering with 1 GPU takes 60 seconds. The total rendering time is 70 seconds.

With 4 GPUs, the 60 seconds of pure rendering time would be divided by 4, taking 15 seconds. But the 10-second extraction still happens on the CPU alone, so it stays the same. The total time would be 10 + 15 = 25 seconds, which is 2.8 times (roughly 3 times) faster than the original 70 seconds, not 4 times faster as might be expected.
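
The worked example above follows a simple model: per-frame time is the serial CPU time plus the single-GPU render time divided by the number of GPUs. Here is a sketch of that model. The function names are hypothetical, and it assumes perfect GPU scaling, which real scenes won’t quite achieve.

```python
# Toy model of single-frame multi-GPU scaling from the example above:
# CPU extraction is serial, GPU rendering is assumed to split perfectly.


def frame_time_s(cpu_s: float, gpu_s_one_gpu: float, num_gpus: int) -> float:
    """Wall-clock seconds for one frame rendered on num_gpus GPUs."""
    return cpu_s + gpu_s_one_gpu / num_gpus


def speedup(cpu_s: float, gpu_s_one_gpu: float, num_gpus: int) -> float:
    """How many times faster num_gpus GPUs are than a single GPU."""
    return frame_time_s(cpu_s, gpu_s_one_gpu, 1) / frame_time_s(cpu_s, gpu_s_one_gpu, num_gpus)


for n in (1, 2, 4, 8):
    t = frame_time_s(10, 60, n)
    print(f"{n} GPU(s): {t:.1f} s/frame, {speedup(10, 60, n):.2f}x")
# 4 GPU(s): 25.0 s/frame, 2.80x
```

Under this model the speedup levels off as GPUs are added: with 10 seconds of serial CPU work out of 70, even an unlimited number of GPUs could not do better than 70 / 10 = 7x. This is Amdahl’s law applied to the example.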

The solution to this problem is to render multiple frames simultaneously. For example, on a computer with 4 GPUs, render 2 frames at a time, with each frame using 2 GPUs. This runs the CPU work for several frames in parallel, which often improves the balance between CPU and GPU work and keeps the GPUs busier.
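
Extending the same toy model to concurrent frames shows why splitting the GPUs helps throughput. The key assumption, also noted in the code, is that the CPU extraction for concurrent frames runs on separate cores without slowing each other down. In practice, extraction, disk access, and RAM use (which multiplies per concurrent frame) all add contention, so real gains will be smaller.

```python
# Average seconds per frame when a 4-GPU machine renders frames concurrently.
# Assumption: concurrent frames' CPU extraction runs in parallel with no
# contention, and GPU work divides evenly inside each GPU group.


def seconds_per_frame(cpu_s: float, gpu_s_one_gpu: float,
                      total_gpus: int, gpus_per_frame: int) -> float:
    """Average wall-clock seconds per frame across all concurrent renders."""
    if gpus_per_frame < 1 or total_gpus % gpus_per_frame:
        raise ValueError("gpus_per_frame must evenly divide total_gpus")
    concurrent_frames = total_gpus // gpus_per_frame
    one_frame = cpu_s + gpu_s_one_gpu / gpus_per_frame
    return one_frame / concurrent_frames


for per_frame in (4, 2, 1):
    avg = seconds_per_frame(10, 60, total_gpus=4, gpus_per_frame=per_frame)
    print(f"{4 // per_frame} frame(s) x {per_frame} GPU(s): {avg:.1f} s/frame")
```

With the example’s numbers, this gives 25.0, 20.0, and 17.5 seconds per frame for 1, 2, and 4 concurrent frames respectively.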

Some render managers, such as Deadline, support this out of the box: Deadline can render multiple frames on subsets of GPUs using a feature called “GPU affinity”. Alternatively, if you don’t use a render manager and prefer your own batch render scripts, you can render from the command line and assign a subset of GPUs to each render.
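
If you write your own batch scripts, the first step is usually deciding which GPUs render which frames. The sketch below only plans that split: it groups GPU device indices and deals frames out round-robin. The helper names are hypothetical. How each group is passed to Redshift’s command-line renderer is intentionally left out, so check Redshift’s command-line documentation for the exact GPU-selection options.

```python
# Plan which GPU group renders which frames when running several renders at
# once. Only the planning step is shown; launching the renders is left out.
# gpu_groups/assign_frames are hypothetical helpers, not part of Redshift.


def gpu_groups(gpu_ids: list[int], per_group: int) -> list[list[int]]:
    """Split GPU device indices into equal, non-overlapping groups."""
    if per_group < 1 or len(gpu_ids) % per_group:
        raise ValueError("per_group must evenly divide the number of GPUs")
    return [gpu_ids[i:i + per_group] for i in range(0, len(gpu_ids), per_group)]


def assign_frames(frames, groups: list[list[int]]) -> dict[tuple[int, ...], list[int]]:
    """Deal frames out round-robin; each group renders its frames in order."""
    plan: dict[tuple[int, ...], list[int]] = {tuple(g): [] for g in groups}
    for i, frame in enumerate(frames):
        plan[tuple(groups[i % len(groups)])].append(frame)
    return plan


groups = gpu_groups([0, 1, 2, 3], per_group=2)  # [[0, 1], [2, 3]]
for gpus, frames in assign_frames(range(1, 7), groups).items():
    print(f"GPUs {list(gpus)} -> frames {frames}")
# GPUs [0, 1] -> frames [1, 3, 5]
# GPUs [2, 3] -> frames [2, 4, 6]
```

A static round-robin plan is easy to reason about but can leave one group idle if some frames are much heavier than others. Render managers typically hand out frames from a queue as each worker becomes free, which avoids that imbalance.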

In summary, to maximize the speedup from multiple GPUs, Redshift works best when rendering multiple frames in parallel across the GPUs.

In closing, Redshift relies on GPUs for rendering but also uses the CPU, memory, and other hardware for preprocessing tasks. Powerful GPUs take the lead, but making sure your CPU, RAM, and storage meet Redshift’s requirements is key to smooth preprocessing. With the requirements covered in this guide, we hope you can balance your configuration to minimize bottlenecks and boost your Redshift rendering productivity.

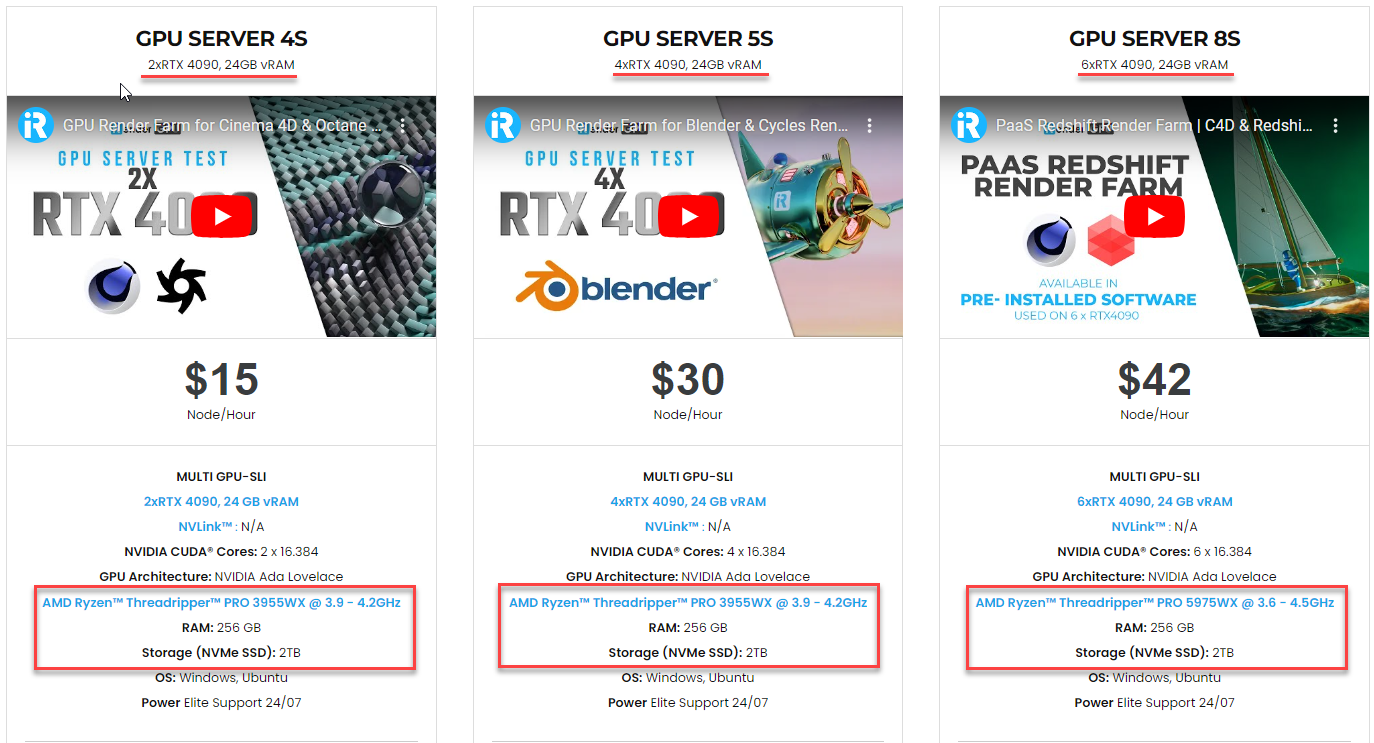

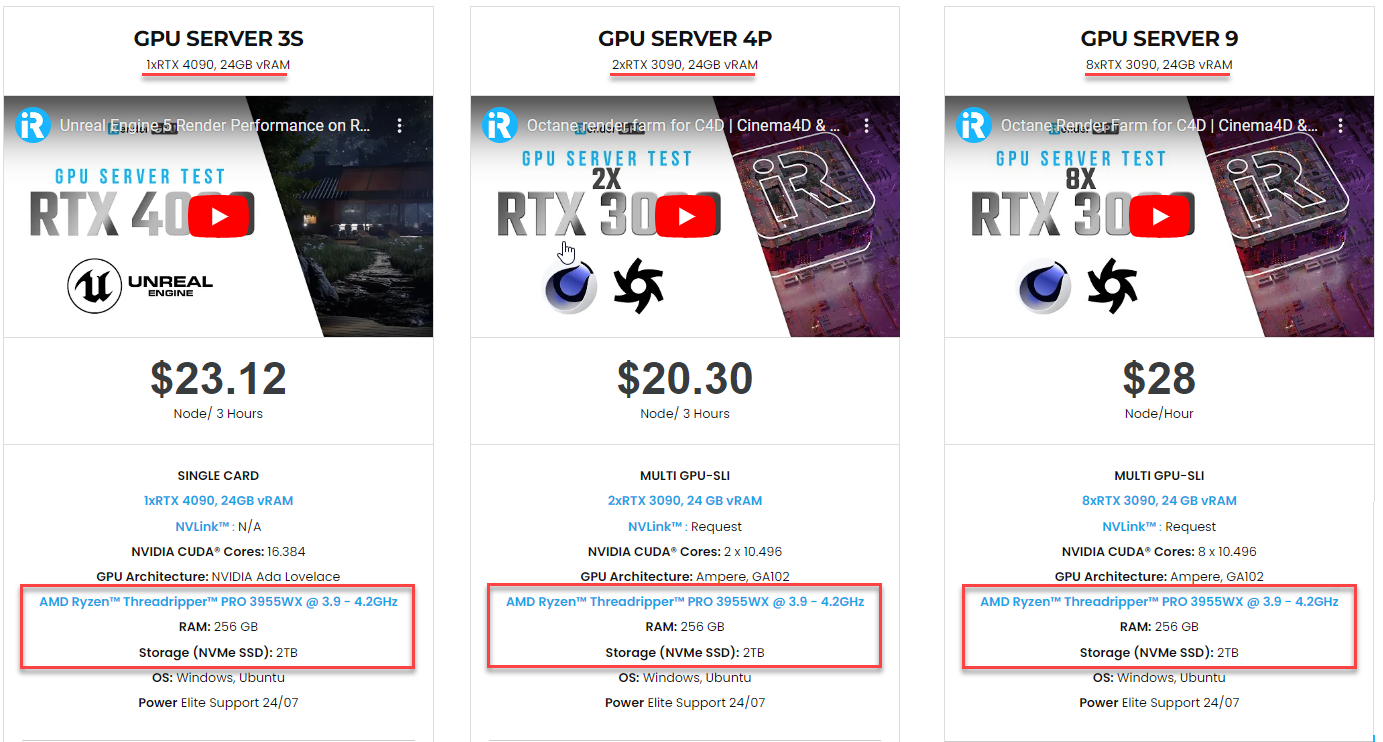

iRender - The Best Render Farm For Redshift Rendering

iRender powers up your creativity with unlimited GPU rendering resources. Our GPU render farm houses the most powerful 3D rendering machines. Configure from 1 to 8 GPUs with top-tier RTX 4090/RTX 3090 cards, Threadripper Pro CPUs, 256GB of RAM, and 2TB of SSD storage. iRender’s machines can handle the demands of any 3D project.

Once you rent our machines, you can use them as your own personal workstations. You can therefore install and use C4D, Redshift, plugins, and any other 3D software in any version (even newly released ones).

As an official partner of Maxon, we currently provide pre-installed C4D and Redshift machines to streamline your pipeline further! Let’s explore how to use our C4D and Redshift machines through our Desktop app.

New user incentives

This November, we are offering an attractive 100% Bonus Program for new users who make their first deposit within 24 hours of registration.

Enjoy our FREE TRIAL to try our RTX 4090 machines and boost your Redshift rendering now!

For further information, please do not hesitate to reach us at [email protected] or mobile: +84915875500.

iRender – Thank you & Happy Rendering!

Reference source: maxon.net
