NVIDIA Releases Omniverse Audio2Face

Omniverse Audio2Face beta simplifies animating a 3D character to match any voice-over track, whether you're animating characters for a game, a film, a real-time digital assistant, or just for fun. You can use the app for interactive real-time applications or as a traditional facial animation authoring tool. Run the results live or bake them out; it's up to you.

In this article, the iRender team takes a closer look at Omniverse Audio2Face.

How does Omniverse Audio2Face work?

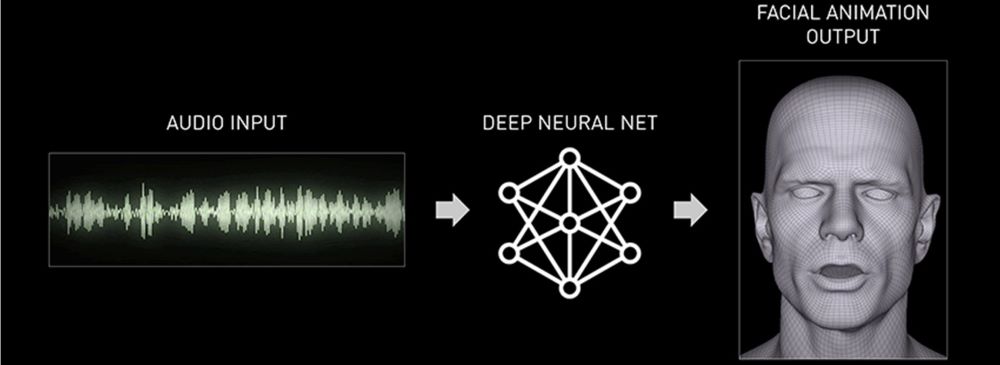

Audio2Face comes preloaded with “Digital Mark”, a 3D character model that can be animated with your audio track, so getting started is simple: just select your audio and upload it. The audio input is fed into a pre-trained deep neural network, and the network's output drives the 3D vertices of your character mesh to create facial animation in real time. You can also edit various post-processing parameters to fine-tune your character's performance. The results shown on this page are mostly raw Audio2Face output, with few or no post-processing parameters changed.

Highlighted features:

- Audio input: Use a recording or animate live

- Character transfer: Face-swap in an instant

- Multiple instances: Express yourself – or everyone at once

- Data conversion: Connect and convert

- Emotion control: Bring the drama

Explore the features of Omniverse Audio2Face in detail here.

NVIDIA Omniverse Audio2Face Available in Open Beta

On April 12, 2021, NVIDIA made Omniverse Audio2Face available in open beta. With the Audio2Face app, Omniverse users can generate AI-driven facial animation from audio sources.

Demand for digital humans is increasing across industries, from game development and visual effects to conversational AI and healthcare. But the animation process is tedious, manual, and complex, and existing tools can be difficult to use or to integrate into existing workflows.

With Omniverse Audio2Face, anyone can now create realistic facial expressions and emotions to match any voice-over track. The technology feeds the audio input into a pre-trained deep neural network from NVIDIA, and the network's output drives the facial animation of 3D characters in real time.

The open beta release includes:

- Audio player and recorder: record and play back vocal audio tracks, then feed the file to the neural network for immediate animation results.

- Live mode: use a microphone to drive Audio2Face in real-time.

- Character transfer: retarget generated motions to any 3D character’s face, whether realistic or stylized.

- Multiple instances: run multiple instances of Audio2Face with multiple characters in the same scene.

The updated version of Omniverse Audio2Face

NVIDIA has released an update to Omniverse Audio2Face, its experimental AI-powered software for generating facial animation from audio. The new version, Audio2Face 2021.3.2, brings two notable new features.

The first is the new BlendShape Generation tool, which generates a set of BlendShapes from a neutral head mesh. All you need to do is connect a character to Audio2Face through the Character Transfer process; the tool does the rest.
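Once a BlendShape set exists, rigs typically evaluate it with the standard linear blendshape model: each target stores the full mesh for one shape, and the deformed mesh is the neutral mesh plus the weighted sum of each target's offset from neutral. The Python sketch below illustrates that general technique only; it is not the Audio2Face generation tool, and the shape names (`jawOpen`, `smile`) and weights are hypothetical.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blendshapes(neutral: List[Vec3],
                      targets: Dict[str, List[Vec3]],
                      weights: Dict[str, float]) -> List[Vec3]:
    """Linear blendshape model: result = neutral + sum_i w_i * (target_i - neutral)."""
    result = [list(v) for v in neutral]
    for name, w in weights.items():
        target = targets[name]
        if len(target) != len(neutral):
            raise ValueError(f"target {name!r} has a different vertex count")
        for i, (n, t) in enumerate(zip(neutral, target)):
            for axis in range(3):
                # Add this shape's weighted offset from the neutral pose.
                result[i][axis] += w * (t[axis] - n[axis])
    return [(v[0], v[1], v[2]) for v in result]


if __name__ == "__main__":
    neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    targets = {
        "jawOpen": [(0.0, -1.0, 0.0), (1.0, 0.0, 0.0)],  # hypothetical shape: moves vertex 0
        "smile":   [(0.0, 0.0, 0.0), (1.2, 0.1, 0.0)],   # hypothetical shape: moves vertex 1
    }
    print(apply_blendshapes(neutral, targets, {"jawOpen": 0.5, "smile": 1.0}))
```

Because the model is linear, an animation only needs to store a handful of weights per frame instead of every vertex position, which is one reason BlendShapes are a convenient export format for other tools.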

The second new feature is the Streaming Audio Player, which streams audio data from external sources, such as text-to-speech applications, over the gRPC protocol.
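A streaming client generally sends audio in small, fixed-duration chunks rather than as a single file. The Python sketch below shows only that chunking step, under stated assumptions: the gRPC service, message types, and required sample format for the Audio2Face Streaming Audio Player are not shown and should be taken from NVIDIA's documentation, and the 100 ms default chunk length is an arbitrary choice.

```python
from typing import Iterator, List, Sequence


def stream_chunks(samples: Sequence[float], sample_rate: int,
                  chunk_ms: int = 100) -> Iterator[List[float]]:
    """Yield consecutive chunks of about chunk_ms milliseconds; the last may be shorter."""
    if sample_rate <= 0 or chunk_ms <= 0:
        raise ValueError("sample_rate and chunk_ms must be positive")
    chunk_size = max(1, sample_rate * chunk_ms // 1000)
    for start in range(0, len(samples), chunk_size):
        # In a real client, each chunk would be wrapped in a request message and
        # sent over the gRPC stream; that API is deliberately not assumed here.
        yield list(samples[start:start + chunk_size])


if __name__ == "__main__":
    tts_output = [0.0] * 16500  # stand-in for 16 kHz audio from a text-to-speech engine
    sizes = [len(c) for c in stream_chunks(tts_output, sample_rate=16000)]
    print(len(sizes), sizes[-1])
```

Using a generator means chunks can be sent as soon as they are produced, which suits live sources such as a text-to-speech engine that emits audio incrementally.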

You can learn more about the update here.

Powerful servers to boost performance in Omniverse

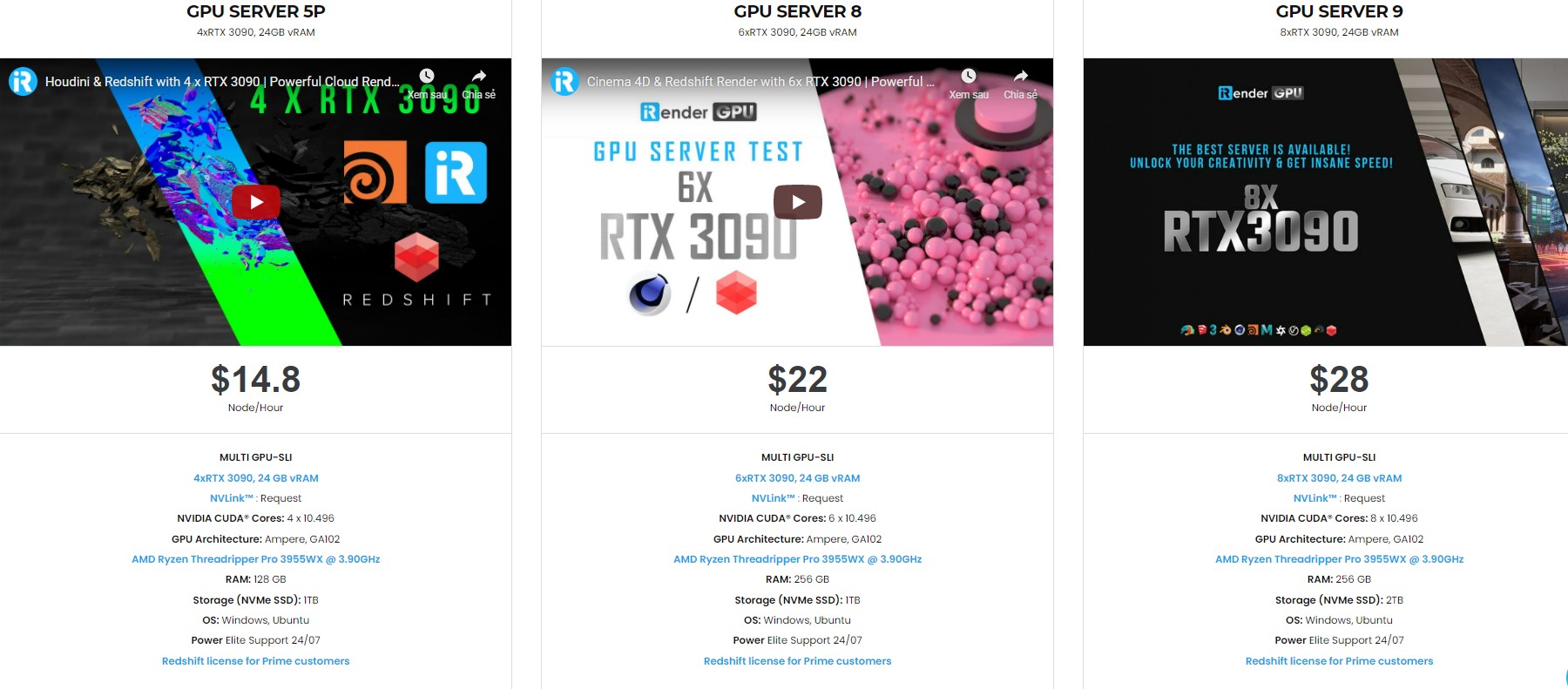

iRender is a suitable and effective service for specialized software such as iClone Connector and NVIDIA Omniverse. We provide high-performance servers for GPU-based rendering engines, accessed through a Remote Desktop application.

In addition, we are releasing new servers for iRender users, powered by an AMD Ryzen Threadripper Pro 3955WX @ 3.90 GHz CPU and equipped with 2, 4, 6, or 8 RTX 3090 GPUs (24 GB VRAM each) to speed up rendering.

iRender's workflow is simple: create an image, boot a system, then connect to our remote servers and take full control of the machine. There you can install any software you want.

We offer not only powerful configurations but also excellent service from a dedicated support team, and we aim to make every customer's experience comfortable and helpful. If you CREATE AN ACCOUNT now and contact us via email ([email protected]) or WhatsApp/Telegram (+84 0394000881), we will sponsor rendering hours on our powerful servers for you.

Source and images: developer.nvidia.com; 80.lv
