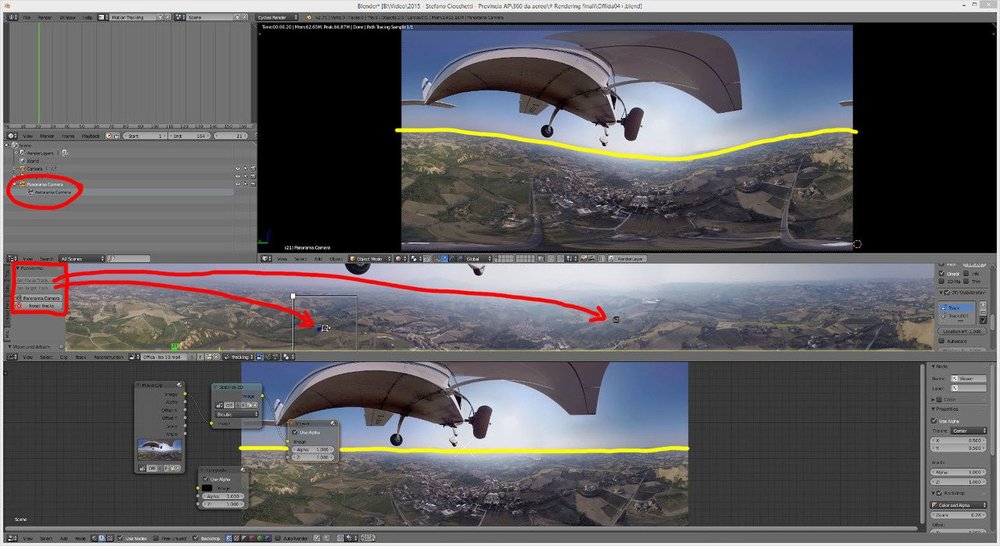

Blender Camera Tracking Tutorial with iRender Cloud Rendering

In post-production and visual effects, camera tracking is the process of recreating the path taken by the real camera that shot the footage. Simply importing a 3D model and placing it on top of the footage won’t look convincing: unless the camera was perfectly still, the ground in your video moves, and you would have to animate the model frame by frame to follow it. The camera tracking feature in Blender 2.8 makes this much easier. In today’s blog, you’ll learn how to use Blender camera tracking to extract the camera’s motion from the footage and create a digital scene within your video.

Why should we use Blender Camera Tracking?

Blender camera tracking can be done in a number of ways and for a variety of purposes. The following are some common use cases:

- Stabilizing footage: You can tell Blender to stabilize or center footage with a few given markers.

- Placing 3D models into the scene: This is the most common use case after setting up 3D tracking for a video in Blender.

- Placing effects or 2D models: As with 3D models, you can place effect layers or 2D drawings into a video, making them look as if they were really in the original footage.

Now, let’s have a look at how to set up Blender camera tracking.

Step 1: Preparing the Footage

First of all, you will need video footage to work on. Grab your phone and record a short video clip, no longer than 10 seconds. It can show a room or an outdoor space, such as a garden or a street. Make sure you have good lighting and that the footage isn’t too shaky, as shaky footage is harder to track. It’s also recommended to record at a higher resolution, if possible, as this will really help in the next steps.

After you’ve taken the video, transfer it to your PC and check that it looks good and isn’t blurry or too shaky. Lastly, convert the video into an image sequence. To do this, open Blender’s “Video Sequencer” and click “Add” to import the video footage you just took. Next, open the output tab in the menu on the right and, under “Output”, choose “PNG” as the file format. Make sure you specify a folder where all the images should be saved. Then, at the top left, click “Render” and choose “Render Animation”. Of course, the conversion can be done with other software; just make sure you aren’t losing image quality.
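If you prefer to do the conversion outside Blender, one option is the command-line tool ffmpeg. The sketch below only builds the command; it assumes ffmpeg is installed, and the file and folder names (`clip.mp4`, `frames`) and the helper name `build_ffmpeg_command` are made up for illustration. PNG is a lossless format, so no image quality is lost at this step.

```python
from pathlib import Path


def build_ffmpeg_command(video_path, output_dir, pattern="frame_%04d.png"):
    """Return the argument list for an ffmpeg call that writes one PNG per frame."""
    output_dir = Path(output_dir)
    return [
        "ffmpeg",
        "-i", str(video_path),       # input video
        str(output_dir / pattern),   # numbered output, e.g. frame_0001.png
    ]


if __name__ == "__main__":
    # Hypothetical names, used only for illustration.
    command = build_ffmpeg_command("clip.mp4", "frames")
    print(" ".join(command))
    # To actually run it (ffmpeg must be installed and the folder must exist):
    # import subprocess; subprocess.run(command, check=True)
```

Keeping the frames in a folder of their own makes the import in the next step simpler.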

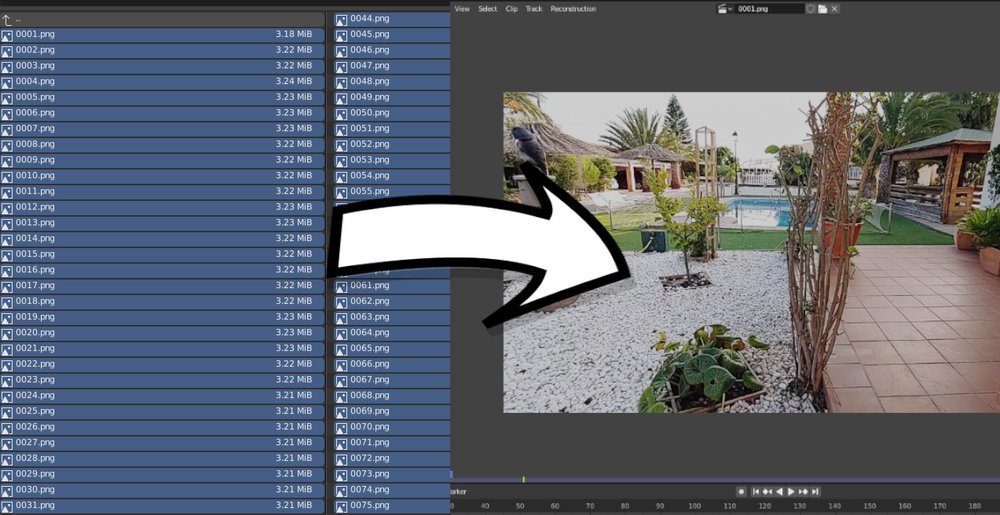

Step 2: Importing the Image Sequence to Blender

Once your video has been converted into a set of images, open a new instance of Blender. Go into the “Movie Clip Editor” and, at the top, click “Open” and select all the images from your video. You can select them one by one or press ‘A’ on your keyboard to select everything (if the images are in their own folder). You should now be able to play the video inside Blender, but playback will probably be quite slow. To solve this, click the “Prefetch” button, then click “Set Scene Frames” so that the frame range in Blender matches the total number of video frames.
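Before importing, it can help to check how many frames the folder actually contains, so you know what frame range to expect after “Set Scene Frames”. The following is a minimal sketch in plain Python (not Blender’s API); it assumes the images end in a frame number, such as `frame_0001.png`, and `frame_range` is a helper name invented here.

```python
import re
import tempfile
from pathlib import Path


def frame_range(folder, extension=".png"):
    """Return (first_frame, last_frame, count) for numbered images in a folder."""
    numbers = []
    for path in Path(folder).iterdir():
        if path.suffix.lower() != extension:
            continue  # ignore anything that is not part of the sequence
        match = re.search(r"(\d+)$", path.stem)  # trailing digits = frame number
        if match:
            numbers.append(int(match.group(1)))
    if not numbers:
        raise ValueError(f"no numbered {extension} files found in {folder}")
    return min(numbers), max(numbers), len(numbers)


if __name__ == "__main__":
    # Demo on a temporary folder with five empty placeholder files.
    with tempfile.TemporaryDirectory() as folder:
        for i in range(1, 6):
            (Path(folder) / f"frame_{i:04d}.png").touch()
        first, last, count = frame_range(folder)
        print(first, last, count)
        if count != last - first + 1:
            print("warning: some frames are missing from the sequence")
```

If the count is smaller than the span between the first and last frame number, some images are missing and the sequence should be re-exported before tracking.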

Step 3: Adding Tracking Markers

With the footage ready, it’s time to add tracking markers into our footage. Before we add any markers, we need to tweak some small settings under the “Tracking Settings” menu:

- Where it says “Motion Model”, change “Loc” to “Affine”.

- Make sure to check the “Normalize” box.

- Under the “Tracking Settings Extra” submenu, change the “Correlation” value from 0.75 to 0.9.
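To get a feel for what a correlation threshold of 0.9 means, here is a small sketch of one common way to score how well two image patches match: the Pearson (normalized) correlation of their pixel values. Whether Blender computes its “Correlation” value in exactly this way is an assumption on our part; the function names and the pixel values are purely illustrative.

```python
import math


def normalized_correlation(patch_a, patch_b):
    """Pearson correlation between two equally sized grayscale patches (flat lists)."""
    if len(patch_a) != len(patch_b) or not patch_a:
        raise ValueError("patches must be non-empty and the same size")
    mean_a = sum(patch_a) / len(patch_a)
    mean_b = sum(patch_b) / len(patch_b)
    da = [a - mean_a for a in patch_a]
    db = [b - mean_b for b in patch_b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # a flat patch has no texture to match against
    return sum(x * y for x, y in zip(da, db)) / denom


def keeps_tracking(reference, candidate, threshold=0.9):
    """True if the candidate patch matches the reference well enough."""
    return normalized_correlation(reference, candidate) >= threshold


if __name__ == "__main__":
    reference = [10, 200, 10, 200]   # high-contrast pattern
    brighter = [30, 220, 30, 220]    # same pattern, slightly brighter
    different = [200, 10, 10, 200]   # a different pattern
    print(keeps_tracking(reference, brighter))
    print(keeps_tracking(reference, different))
```

A stricter threshold makes a marker stop earlier when the match degrades, which is why high-contrast features are recommended in the next paragraph.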

You’ll need to add at least eight markers, preferably on areas of the footage which are easy to track, such as objects with high contrast, like white stones on a dark floor, a golf ball on grass, or specific patterns in a tiled floor. Although not compulsory, we recommend you start placing the trackers in the first frame. To add these trackers, hold CTRL and right-click. Each time you do this, a small white square should appear; use the mouse to place it on the part of the footage you want to track. Keep doing so until you have at least eight visible trackers in your scene. You can also scale them up by pressing ‘S’ on your keyboard.

Step 4: Tracking the Footage

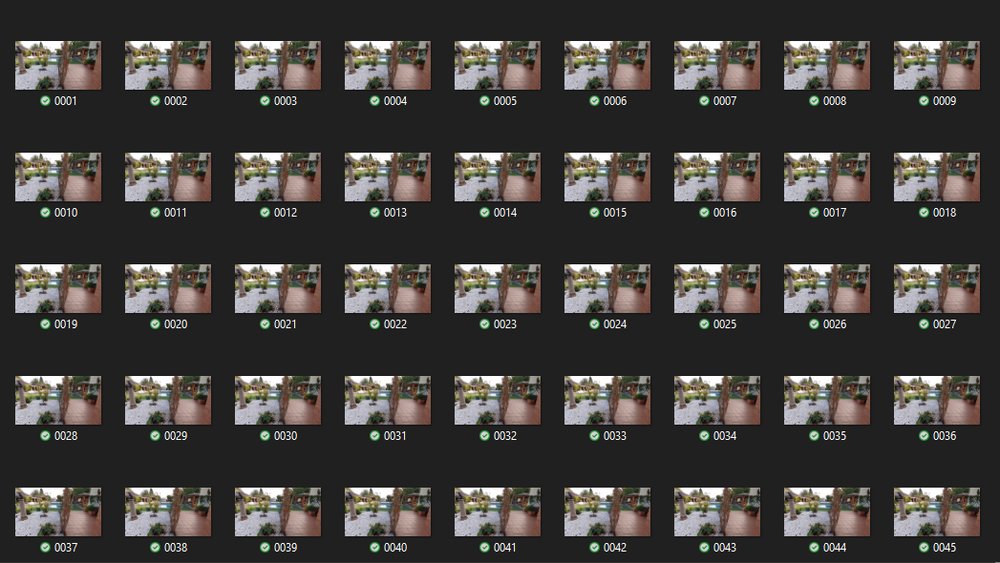

Once you’ve placed all the tracking markers, it’s time to actually start tracking the footage. There are two common ways of doing this: press “Alt + Right Arrow” to track one frame forward, or press “Ctrl + T” to track the entire footage. Make sure you have all of your markers selected before doing so. If everything goes well, each marker should follow the part of the footage you placed it on and stay there until the end of the video.

Some markers may go red and stop tracking. Don’t worry – this is quite common, especially if the footage is hard to track. To fix this, select the markers that stopped tracking halfway through and resize them a bit. This creates a new keyframe for the tracker, which should help it track the footage properly. Repeat this process for all the markers that didn’t track through the entire footage. Note that you may need to resize them more than once. One last thing to keep in mind: if a marker still won’t keep tracking after this step, leave it alone and add another. Not all markers will work perfectly, especially if the footage is blurry.

Step 5: Solving the Camera

With at least eight markers properly tracking our footage, it’s time to “solve” the camera motion. In other words, we’ll have the program calculate its approximate movement.

- Go to the “Solve” tab located on the left section.

- Check the “Keyframe” box.

- Next to “Refine”, change “Nothing” to “Focal Length, Optical Center, K1, K2”.

- Click the “Solve Camera Motion” button.

If everything went well, Blender will now have created a camera trajectory very similar to that of the camera that recorded the real footage. You’ll get an “average error” displayed as a number in the top right. We advise keeping this average error below 0.5 for good results. To find out which markers are raising it, click the “Clip Display” tab, also located on the top right, and enable the “Info” box. This should allow you to see the average error for every individual tracker you placed in the footage. The aim is to delete the markers with an extremely high average error, or to lower their influence by going to the “Track” menu while the tracker is selected and reducing the “Weight” slider to a lower value of around 0.6. After changing the markers, solve the camera motion again to update the average error.
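The clean-up logic described above can be sketched with a few lines of plain Python. This is only arithmetic on per-tracker errors you would read off the screen, not Blender’s solver: the real solve error has to be recomputed by Blender after each change. The tracker names, error values, and helper names below are invented for illustration.

```python
def weighted_average_error(track_errors, weights=None):
    """Weighted mean of per-tracker errors; trackers default to weight 1.0."""
    weights = weights or {}
    total = 0.0
    total_weight = 0.0
    for name, error in track_errors.items():
        weight = weights.get(name, 1.0)
        total += weight * error
        total_weight += weight
    if total_weight == 0:
        raise ValueError("at least one tracker needs a non-zero weight")
    return total / total_weight


def tracks_to_review(track_errors, limit):
    """Names of trackers whose error exceeds the limit, worst first."""
    bad = [name for name, error in track_errors.items() if error > limit]
    return sorted(bad, key=lambda name: track_errors[name], reverse=True)


if __name__ == "__main__":
    # Made-up per-tracker errors.
    errors = {"Track.001": 0.2, "Track.002": 0.3, "Track.003": 2.5, "Track.004": 0.4}
    print(tracks_to_review(errors, limit=1.0))
    print(weighted_average_error(errors))                       # all weights 1.0
    print(weighted_average_error(errors, {"Track.003": 0.6}))   # bad tracker down-weighted
    without_bad = {n: e for n, e in errors.items() if n != "Track.003"}
    print(weighted_average_error(without_bad))                  # bad tracker deleted
```

In this made-up example a single bad tracker is enough to push the average above 0.5, which is why reviewing the per-tracker errors is usually the quickest way to improve a solve.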

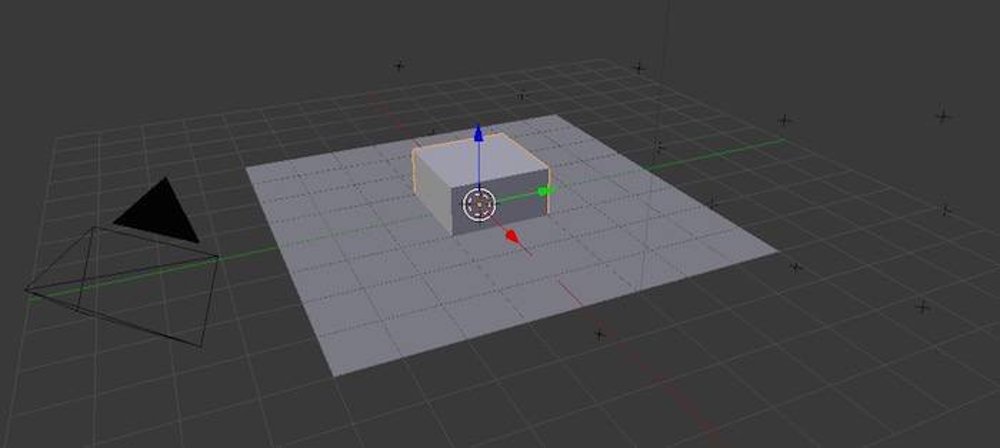

Step 6: Setting the 3D Scene

Once you’re satisfied with your tracked footage, go to the “Scene Setup” menu located to the left and click “Setup Tracking Scene”. If you now go back into the 3D viewport and to the camera view, you’ll see how the default cube and a flat plane will move together with the video background of the footage you tracked. However, there are still a few final steps to make it look good.

Choose one tracker to be the origin, another tracker above that to be the Y-axis, and one to the side of the origin tracker to be the X-axis. Choose three trackers which more or less make the floor of your scene and click “Floor”. Finally, choose two trackers and set an appropriate scale for them. With all of this done, you should now have a proper 3D camera solution for your scene. That means you can now proceed to put a 3D model anywhere in your 3D viewport.
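The geometry behind the “Floor” and scale steps is simple enough to sketch in plain Python. Three points define a plane, and its normal is the direction that should become “up”; two points with a known real-world distance give the factor by which the solved scene must be scaled. This illustrates the idea only and is not Blender’s implementation; the coordinates and helper names are invented.

```python
import math


def subtract(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def length(v):
    return math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)


def floor_normal(p0, p1, p2):
    """Unit normal of the plane through three tracker positions."""
    normal = cross(subtract(p1, p0), subtract(p2, p0))
    size = length(normal)
    if size == 0:
        raise ValueError("the three trackers lie on one line; pick different ones")
    return tuple(component / size for component in normal)


def scale_factor(p_a, p_b, real_distance):
    """Factor that makes the solved distance between two trackers match reality."""
    solved_distance = length(subtract(p_b, p_a))
    if solved_distance == 0:
        raise ValueError("the two trackers are at the same position")
    return real_distance / solved_distance


if __name__ == "__main__":
    # Made-up solved tracker positions.
    print(floor_normal((0, 0, 0), (1, 0, 0), (0, 1, 0)))
    print(scale_factor((0, 0, 0), (3, 4, 0), real_distance=10.0))
```

This also shows why the three floor trackers should not sit on a single line, and why the two scale trackers should be far enough apart for their distance to be measured reliably.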

That’s all for this Blender camera tracking tutorial. We hope you find this article useful for your work!

The Professional Cloud Rendering Service For Blender

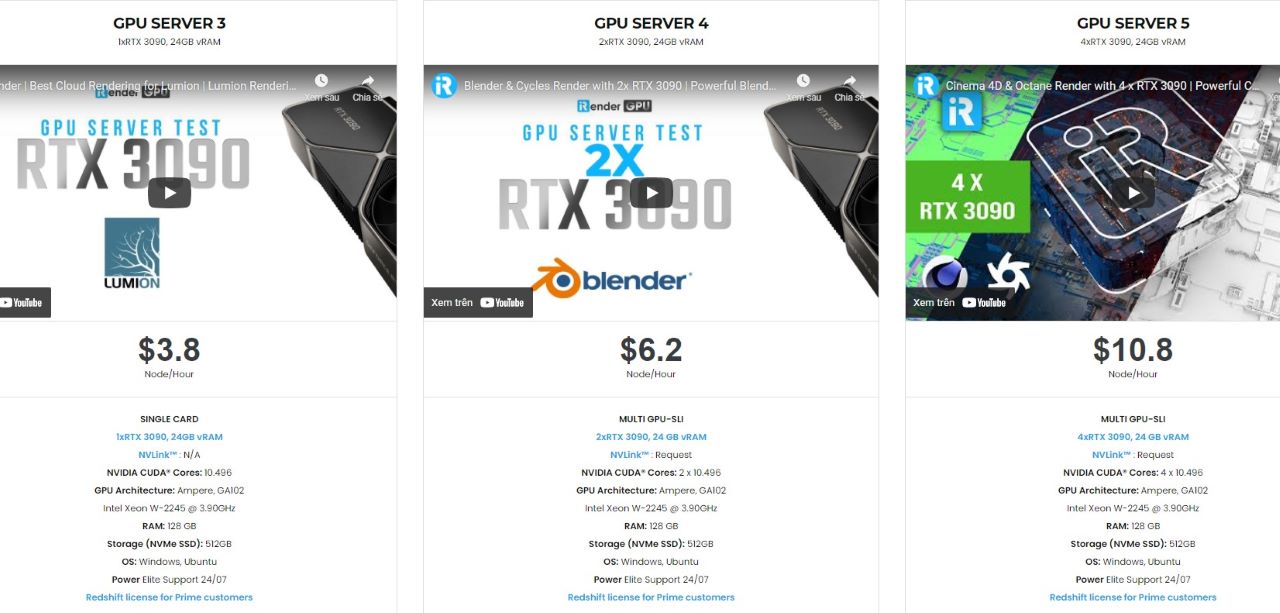

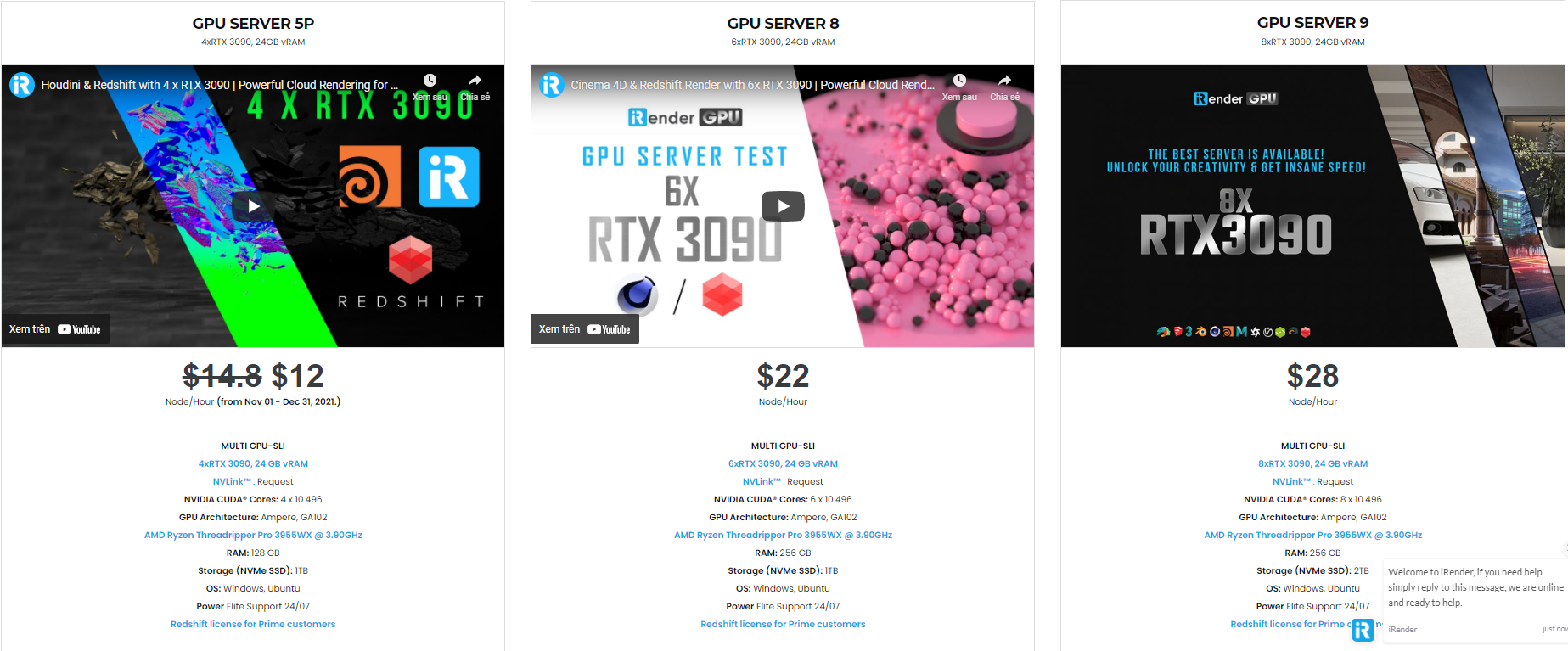

iRender is a professional GPU-accelerated cloud rendering service provider, optimized for HPC rendering tasks, CGI, and VFX, with over 20,000 customers and recognition in many global rankings (e.g. CGDirector, Lumion Official, Radarrender, InspirationTuts CAD, All3DP). We are proud to be one of the few render farms that support all software and all versions. Users connect remotely to our servers, install their software only once, and then carry out any intensive task just as they would on their local computers. Blender users can choose a machine configuration ranging from the recommended system requirements to high-end options, to suit any project’s demands and speed up rendering many times over.

High-end hardware configuration

- Single and multi-GPU servers: 1/2/4/6/8x RTX 3090/3080/2080Ti, notably the NVIDIA RTX 3090.

- 10/24 GB of vRAM, enough for the heaviest images and scenes. NVLink/SLI is available on request for more vRAM.

- A RAM capacity of 128/256 GB.

- Storage (NVMe SSD): 512GB/1TB.

- Intel Xeon W-2245 or AMD Ryzen Threadripper Pro 3955WX CPU with a high clock speed of 3.90GHz.

- Additionally, iRender provides NVLink (on request), which helps you increase the amount of vRAM to 48GB. NVLink is a technology co-developed by Nvidia and IBM with the aim of expanding the data bandwidth between the GPU and CPU to 5 to 12 times that of the PCI Express interface. These servers are sure to satisfy Blender artists and studios working with very complex and large scenes.

Let’s see rendering tests with Blender on multi-GPU at iRender:

Reasonable price

iRender provides high-end configurations at a reasonable price. In terms of performance/price ratio, iRender’s packages cost much less than those of other render farms. Moreover, iRender’s clients enjoy many attractive promotions and discounts. For example, this October we are offering a promotion for new users and discounted prices on many servers, which users can take advantage of to level up their renders.

SPECIAL OFFER for this December: Get 20% BONUS for all newly registered users.

If you have any questions, please do not hesitate to reach us via WhatsApp: +(84) 916017116 for advice and support. Register an ACCOUNT today and get a FREE COUPON to experience our service.

Thank you & Happy Rendering!

Source: all3dp.com
